Nvidia took a bow for delivering the fastest platform for inference on generative AI in the latest industry-standard tests as part of MLPerf benchmarks released Wednesday. Meanwhile, Intel touted its Gaudi 2 accelerator as the only benchmarked alternative to Nvidia’s H100 for generative AI performance.

For the first time, ML Commons added Llama 2 70B to its inference benchmarking suite, MLPerf Inference 4.0. “In terms of model parameters, Llama 2 is a dramatic increase to the models in the inference suite,” said Mitchelle Rasquinha, co-chair of the MLPerf Inference working group.

MLPerf Inference 4.0 benchmarks included more than 8,500 performance results across 23 submitting organizations, including Nvidia, Intel, Google, Broadcom, Cisco, HPE, Juniper Networks, Oracle, Qualcomm, Supermicro and more.

Four companies also submitted data center-focused power numbers for MLPerf Inference 4.0, which evaluated power consumption captured while inference benchmarks were running. They were Dell, Fujitsu, Nvidia and Qualcomm.

Nvidia relied on TensorRT-LLM software to speed up inference when running on Nvidia Hopper GPUs on GPT-J LLM by 3x over a benchmark from six months ago. The company took the opportunity to tout its new NIM for inference microservices including TensorRT-LLM for businesses to use.

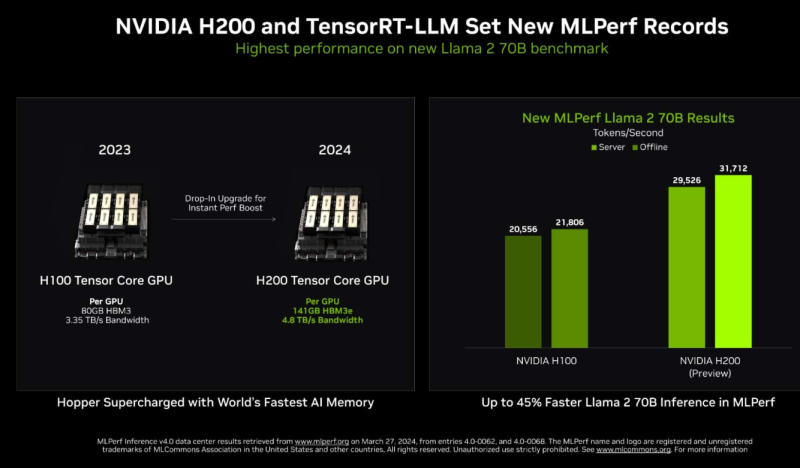

TensorRT-LLM running on memory-enhanced H200 GPUs produced up to 31,000 tokens per second, which set a record for a Llama 2 benchmark, Nvidia said. H200 GPUs are shipping and will soon be available from nearly 20 system builders and cloud providers. They include 141GB of HBM3e memory running at 4.8TB/second, which represent 76% more memory that runs 43% faster over the H100 GPUs. They use the same boards and software as H100 GPUs.

Intel also submitted Gaudi 2 accelerator results for Llama 2 70B which delivered 8035 offline tokens per second and 6287.5 server tokens kper second. Gaudi Llama results relied on the Hugging Fact Text Generation Interface. Intel Gaudi 2 accelerators and 5th Gen Xeon processors are available for evaluation in the Intel Developer Cloud.

RELATED: Blackwell platform puts Nvidia in higher realm for cost and energy