For months, Intel has been challenging Nvidia’s dominance in GPUs used for generative AI and supercomputing. Given Nvidia’s market share, it is an uphill climb, but some recent benchmarks have been impressive.

In a preview of SC23, which runs this week in Denver, Ogi Brkic, Intel’s general manager of data center and AI/HPC, compared Intel’s Gaudi2 AI accelerator to Nvidia’s H100. In one slide he presented, Intel called Gaudi2 the “only viable alternative to H100” and highlighted its price-performance advantage over H100 for generative AI and large language model (LLM) workloads. Intel said a recent MLPerf GPT-J inference benchmark showed Gaudi2 delivering “near-parity performance” with the Nvidia chip.

Many of Intel’s claims for Gaudi2 have emerged gradually over recent months, but Brkic was emphatic: “There’s huge demand for Gaudi right now; the ecosystem is growing.”

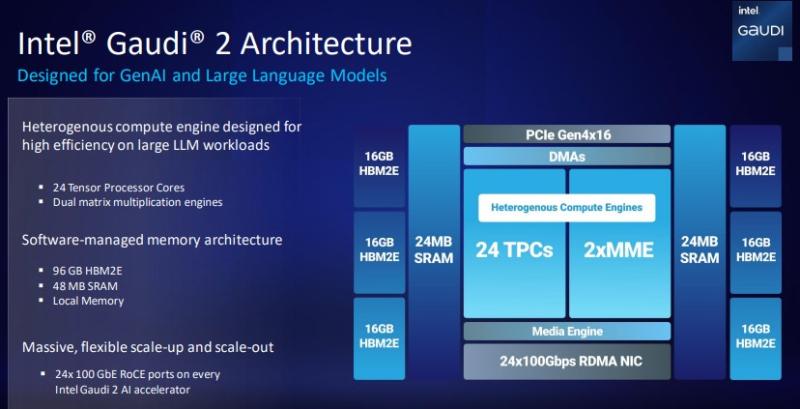

The Gaudi2 architecture includes 24 Tensor Processor Cores and a software-managed memory design with 96 GB HBM2E and 48 MB of SRAM.

Gaudi2 is built on a 7nm process node. Its successor, Gaudi 3, will move to a 5nm node and offer double the networking capability and 1.5 times the HBM capacity. Gaudi 3 is slated to arrive in 2024.

Brkic also updated Intel’s accelerator and GPU roadmap. The Intel Data Center GPU Max Series will be succeeded by a next-generation GPU code-named Falcon Shores, which uses a modular, tile-based design and standard Ethernet. It will feature converged Habana and Xe IP and a single GPU programming interface.

Intel had previously announced that its fifth-generation Xeon processors, code-named Emerald Rapids, would ship in December, and Brkic said the launch date is Dec. 14. Intel said the next Xeon, Granite Rapids, is on schedule for 2024.

At SC23, Intel will showcase a range of its chips and their use cases. Brkic also described Intel’s work with research institutions that use AI supercomputers. In one example, the Dawn supercomputer at the University of Cambridge is being used for research in healthcare, green fusion energy, and climate modeling. The system uses direct liquid cooling.

Dawn comprises 256 nodes with a total of 512 4th Gen Intel Xeon processors and 1,024 Intel Data Center GPU Max Series GPUs.

Brkic described Argonne National Laboratory’s Aurora supercomputer as the “largest GPU deployment we know of,” with 60,000 Intel Data Center GPU Max Series GPUs across 166 racks and 10,000 nodes. He said the system is devoted to a “Science GPT,” a model trained on scientific research that can be applied to high-energy particle physics, drug discovery, and more.

Across workloads in various industries, including energy and manufacturing, Brkic said Intel’s Data Center GPU Max 1550 has delivered 1.36 times the performance of Nvidia’s H100 PCIe.