The recently announced Amazon Go aims to transform the age-old brick-and-mortar retail experience. The official news broke via multiple channels, including a well-produced YouTube video showing shoppers entering a stylish grocery store. Central to the concept is the absence of any physical checkout system: shoppers check in upon arrival and then browse as they normally would.

Amazon says it uses a combination of computer vision, machine learning, and artificial intelligence (AI) to track users and items throughout the store. When a shopper picks up a product, this array of in-store sensors and back-end analytics automatically tabulates the final bill (deducting it from the shopper's Amazon account, of course) and lets the shopper simply leave, an approach Amazon calls "Just walk out technology."

According to initial reports, Amazon has built a 1,800 ft² test site in one of its Seattle buildings. While the store is currently open only to Amazon employees, the company says it may open to the public in early 2017. To put the store's scope in perspective, it is the size of a modest home, whereas most of the US's 38,000 supermarkets are 50,000 ft² or more, which is more than 25 times the size of Amazon's store.

The Project Is Ambitious

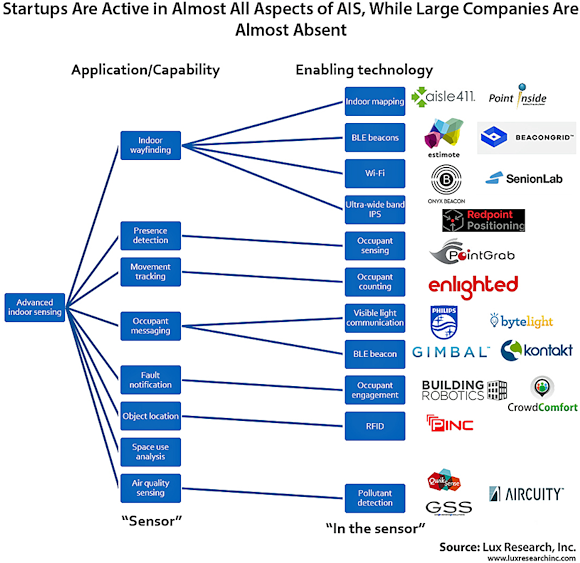

Though the project is highly ambitious, no single piece of it is remarkable; it is the system integration that makes it compelling. The retail segment has been by far the earliest adopter of advanced indoor sensing (AIS), a toolbox of technologies that allow user-centric interaction with buildings (see the Lux Research report, Advanced Indoor Sensing: The Next Frontier of the Built Environment).

AIS applications range from people tracking to messaging, indoor location, and more. Retail-focused startups like Aisle 411 and Point Inside have helped digitize thousands of retail stores for chains such as Walgreens and Toys R Us. They use a mix of beacons, electronic maps, and sensor fusion to help guide shoppers through a store and ultimately increase basket size. Aisle 411 is even working with Google's Project Tango to enable augmented reality (AR) capability in-store, helping shoppers navigate to a particular item and displaying product information with eerily high precision. Lowe's is using Project Tango as well; its "holoroom" allows customers to visualize furniture in their own homes.

Amazon can use all of these technologies, and more. However, several key technical details are highly relevant:

Sensor Fusion Possible, Yet Largely Unused

While the idea of pulling data from shoppers' smartphones is often discussed, using it is difficult without running into privacy issues. Retailers that have used this capability to date rely on PII (personally identifiable information), by requiring shoppers to sign into a frequent shopper account, allowing them to track movement, purchasing history, etc.

This "check-in" is core to tracking users as they move through a store. It is highly likely that Amazon is using a mix of Bluetooth beacons coupled with visible light communication (VLC) to enable location tracking throughout the store, as both technologies are available in the market today from lighting companies such as Acuity Brands. There is no mention of RFID, and while it is possible that Amazon uses it, the concept does not depend on it.
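To make the beacon idea concrete, here is a minimal sketch of how a phone might turn Bluetooth signal strength into a rough position. It uses the standard log-distance path-loss model, and it is not a description of Amazon's system. The function names, the calibrated 1 m power of -59 dBm, the path-loss exponent of 2.0, and the beacon labels are all illustrative assumptions.

```python
def rssi_to_distance_m(rssi_dbm, tx_power_dbm=-59.0, path_loss_exponent=2.0):
    """Estimate distance to a beacon from received signal strength.

    Log-distance path-loss model: d = 10 ** ((P_1m - RSSI) / (10 * n)).
    tx_power_dbm is the (assumed) calibrated RSSI at 1 m; path_loss_exponent
    is about 2 in free space and usually higher in a cluttered store.
    """
    return 10 ** ((tx_power_dbm - rssi_dbm) / (10.0 * path_loss_exponent))


def nearest_beacon(readings):
    """Return the beacon ID with the smallest estimated distance.

    readings maps a hypothetical beacon ID to its latest RSSI in dBm.
    """
    return min(readings, key=lambda beacon: rssi_to_distance_m(readings[beacon]))


if __name__ == "__main__":
    # Hypothetical readings from three aisle beacons.
    readings = {"dairy": -80.0, "produce": -62.0, "bakery": -71.0}
    for beacon, rssi in readings.items():
        print(f"{beacon}: ~{rssi_to_distance_m(rssi):.1f} m")
    print("closest zone:", nearest_beacon(readings))
```

In practice RSSI is noisy indoors, which is one reason a retailer would pair beacons with a second signal such as VLC rather than rely on either alone.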

Computer Vision Unproven

While several startups such as PointGrab have leveraged low-resolution video for sensing, few have used high-end computer vision outside of the residential segment. Residential security cameras which use computer vision for functions like facial recognition are still in development, or work poorly.

Costs are also high for the devices themselves, ranging from $250 to $400 per camera, as they require onboard processing capability. Assuming a 50-ft² radius, costs would balloon to $1.5 million per retail site for cameras alone – excluding sensors in the shelves, which would also be required. One industry CEO to whom we spoke told us that there will be edge cases that will be very challenging, and another Silicon Valley veteran proposed fingerprint scanning as a way to deal with this hurdle.
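The structure of such an estimate can be sketched in a few lines. The sketch below counts camera hardware only, and it reads the coverage figure as 50 ft² of floor area per camera in a 50,000 ft² supermarket; both readings are assumptions, as is the function name. Installation, networking, shelf sensors, and back-end processing are left out, so the output should not be expected to match the per-site figure quoted above.

```python
import math


def camera_hardware_cost(store_area_ft2, coverage_per_camera_ft2, unit_cost_usd):
    """Return (camera_count, total_hardware_cost_usd) for one store.

    Assumes cameras tile the floor with no overlap, which understates the
    count a real deployment would need.
    """
    cameras = math.ceil(store_area_ft2 / coverage_per_camera_ft2)
    return cameras, cameras * unit_cost_usd


if __name__ == "__main__":
    # Assumed inputs: 50,000 ft2 store, 50 ft2 covered per camera,
    # and the $250 to $400 unit price range cited in the text.
    for unit_cost in (250, 400):
        cameras, cost = camera_hardware_cost(50_000, 50, unit_cost)
        print(f"{cameras} cameras at ${unit_cost} each: ${cost:,}")
```

The total scales inversely with coverage per camera, so the coverage assumption dominates the result far more than the unit price does.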


AI Makes Sense

The Amazon Go retail store embodies how AI can be implemented behind the scenes to solve specific problems and enable amazing innovations in the process. Amazon claims its new store uses "deep learning", a family of methods that typically depends on large, clean data sets, whether the training is supervised or semi-supervised.

More startups have their fingers in AI than large companies.

The extent of Amazon's use of AI technology is unclear at the moment, but the company's advertisement likens the implementation to that of self-driving cars, which hints at some of the potential applications of deep learning in the Amazon Go store. For instance, ConvNets (convolutional neural networks) are widely used today to process imagery for tasks such as facial and object recognition, which could be used to monitor individuals as they navigate the store and to help detect any items they pick up. Moreover, one can easily see how a user's purchase history could supplement information obtained from sensors to better inform the system of what might have been picked up.
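One simple way purchase history could supplement sensor information is a Bayesian update: treat the vision system's scores as likelihoods and the shopper's past purchases as a prior. The sketch below is purely illustrative and is not Amazon's method; the function name, the item labels, the probabilities, and the floor value for unseen items are all assumptions.

```python
def fuse_vision_with_history(vision_likelihood, history_prior, floor=1e-3):
    """Combine per-item vision scores with a purchase-history prior.

    posterior(item) is proportional to likelihood(item) * prior(item).
    Items missing from the shopper's history get a small floor prior so
    a first-time purchase is never ruled out entirely.
    """
    unnormalized = {
        item: score * history_prior.get(item, floor)
        for item, score in vision_likelihood.items()
    }
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("vision scores are all zero; nothing to fuse")
    return {item: value / total for item, value in unnormalized.items()}


if __name__ == "__main__":
    # The cameras cannot tell two similar tubs apart...
    vision = {"plain_yogurt": 0.5, "vanilla_yogurt": 0.5}
    # ...but this hypothetical shopper usually buys the plain one.
    prior = {"plain_yogurt": 0.8, "vanilla_yogurt": 0.2}
    for item, p in fuse_vision_with_history(vision, prior).items():
        print(f"{item}: {p:.2f}")
```

When the cameras are confident, the likelihood dominates and the history barely matters; the prior only tips the balance in ambiguous cases like the one shown.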

Other possible applications of deep neural networks include using user purchase history as input data for recommendation engines to help suggest items of potential interest to customers, or even for anomaly detection, whereby suspicious user behavior can be detected to prevent theft. Overall, though the use of the terms "artificial intelligence" and "deep learning" may ultimately be marketing ploys, there are some very interesting foreseeable applications of deep learning in the Amazon Go ecosystem. And given that this news comes shortly after the announcement of Amazon AI, it is highly likely that Amazon has the tech to back up its words and successfully apply AI to make the digital grocery store of the future a reality.

Bottom Lines

Retailers are inherently secretive about the economics of their operations, but they are experimenting with these technologies because they offer operational improvements coupled with a better user experience.

Many AIS technologies do not have a clear ROI, and neither does the Amazon store. The key for Amazon will be to leverage these capabilities for adjacent use cases in the future, such as using robots to help restock shelves or delivering personalized in-store nutrition planning.

About the Author

Alex Herceg is an Analyst who leads the Intelligent Buildings research practice at Lux Research. He regularly analyzes technologies, strategies, and business models of emerging companies related to energy use in buildings. In this role he advises Lux's large corporate clients on issues related to innovation, strategy, investments and partnerships, and product development.
