Intel on Wednesday pegged its five-year total addressable market (TAM) for data center silicon at $110 billion, double the TAM the beleaguered chip giant described a year ago.

Generative AI workloads will drive much of the compute demand Intel is talking about, even though the company so far holds only a small share of the GPU market, which is dominated by Nvidia (as much as 80%) and AMD (somewhat less than 20%). Still, there is plenty of room for Intel's CPUs, and its other products, to run AI workloads, a case at least one analyst is willing to make.

“Intel is no longer willing to rest on its laurels and be out-competed by others,” said Jack Gold, an analyst at J. Gold Associates. “It is taking the battle directly to AMD and Nvidia as well as establishing a path to take on the custom cloud chips being put in place by hyperscalers, primarily based on ARM.”

Gold added that Intel is “getting very serious about filling the weak spots it had over the past couple of years in the data center, cloud and AI spaces…Those who have considered Intel a dead horse in the race need to do a reassessment.”

He was reacting to comments made during an Intel investor webinar on Wednesday led by Sandra Rivera, general manager of Intel’s data center and AI group. “When we talk about compute demand, we often look at the TAM through the lens of CPU units,” Rivera said. “However, counting sockets does not fully reflect how silicon innovations deliver value to the market. Today, innovations are delivered in several ways, including increased CPU core density, the use of accelerators built into the silicon and the use of discrete accelerators.”

Intel wrote in a blog post after the event that the larger TAM reflects the integration of accelerators and advanced GPUs into its data center business, which lets Intel serve a "wider swath" of customers. The company added that its Xeon CPUs, used for AI, analytics, security, networking and HPC, are also driving demand for mainstream compute.

“Intel is already foundational in AI hardware,” Intel said. Rivera added, “Customers want portability in their AI workloads. They want to build once and deploy anywhere. As we continue to deliver heterogeneous architectures for AI workloads, deploying them at scale will require software that makes it easy for developers to program and vibrant open and secure ecosystem to flourish.”

Gold conceded that Nvidia is the clear leader at the high end of AI/ML with its H100 GPU. But he added that much AI inference can be performed on a CPU, especially one with built-in AI accelerators. "That is the market that Intel wants to own with Xeon," he said. Intel also offers AI-focused processing through AMX (Advanced Matrix Extensions) and ML Boost.

AMD does well in data centers with its Epyc server line, but "it currently can't compete with Intel's advantages in the AI inference space," Gold said. Intel's Habana Gaudi 2 chips have also competed effectively with Nvidia's A100 systems in test results from Hugging Face. (Hugging Face, a player in ML app development, announced this week that it had enabled the 176-billion-parameter BLOOMZ model on Habana's Gaudi 2: https://huggingface.co/blog/habana-gaudi-2-bloom.)

Rivera noted that Xeon is used alongside Nvidia's H100 GPUs to power virtual machines that accelerate generative AI models in Azure, including ChatGPT. She specifically mentioned the 4th Gen Xeon as the head node running alongside the H100s.

Intel has put cloud providers on notice, Gold added, that it will compete in the power efficiency space with its efficiency cores and Sierra Forest chips. They are a “direct challenge to Amazon Graviton and Google custom chip efforts,” Gold said. Both Amazon and Google want to offer power efficiency for lower power workloads “so they can charge less for their services.”

Projecting the chip roadmap

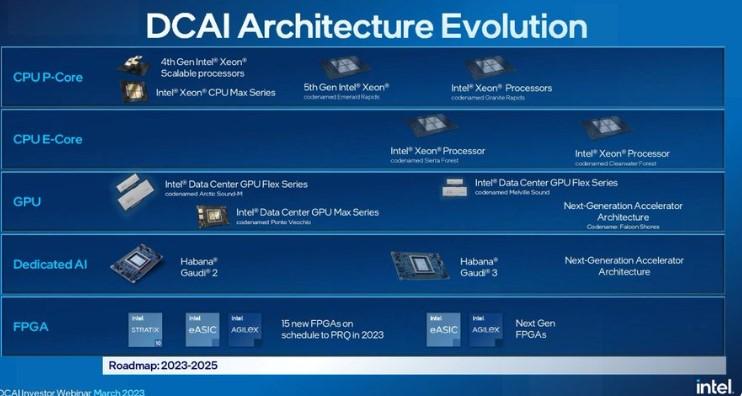

While Intel's AI prowess took much of the day's attention, Rivera also posed with a 5th Gen Intel Xeon Scalable processor, codenamed Emerald Rapids. It is Intel's next Performance-core product, slated for a fourth-quarter launch, and is already sampling to customers, with volume validation underway. Rivera also laid out other products in Intel's annual roadmap update.

Intel also showed off the 4th Gen Xeon, already shipping, which is now being deployed by the top 10 global cloud service providers. In a head-to-head demo, a 48-core 4th Gen Xeon delivered a 4X average performance gain on deep learning workloads over a 48-core 4th Gen AMD Epyc CPU.

In addition, Intel said Sierra Forest is on schedule to ship in the first half of 2024, with first samples already out the door. It is built on the Intel 3 process and packs 144 cores per socket.

Granite Rapids will follow Sierra Forest in 2024, though no specific launch date has been disclosed. It is also sampling to customers. It has the fastest memory interface of any chip, Intel said.

Clearwater Forest will follow Sierra Forest in 2025 and will be made on the Intel 18A node, capping the company's "five nodes in four years" strategy.

While Intel claims it is on target with five nodes in four years, the schedule is subject to some criticism, and even ridicule. On Twitter, a tweet by @witeken noted that “Intel is now two years into the ‘5 nodes in 4 years’ roadmap…and still has only shipped 1 node, leaving 4 nodes in 1.5 year.”

Dylan Patel, chief analyst at SemiAnalysis, summed up some of the skepticism this way in comments to Fierce Electronics: “The Intel roadmap would help them be competitive with AMD across the board in 2025 if they execute on time, but that’s the real question. Intel needs to execute because no one believes their roadmaps.”

Skepticism aside, investors liked what Intel presented: the stock closed up more than 7% on Wednesday, at $31.52 a share.
