Intel’s momentous “AI Everywhere” campaign to show it is a strong competitor in the AI chip arena moved into high gear Thursday. CEO Pat Gelsinger and top executives argued the company can help customers with AI training and inference jobs across huge data centers as well as aboard AI PCs and even on smaller mobile devices.

The chipmaker’s approach will be helped by the launch of two new chips: Core Ultra for PCs and mobile, and Fifth Generation Xeon for servers. Both have been promised for months, but Intel was keen to show it can meet a rigorous production schedule to please both customers and investors, an area where it has been criticized in the past.

“We’re getting mojo back in the execution machine,” Gelsinger told reporters in a recent briefing. “We have to get back to unquestioned leadership.” He added, “We’re driving the team like crazy to get there”—to next-generation Xeon Granite Rapids and Xeon Sierra Forest chips slated for 2024.

He also confirmed Intel is not building an Arm processor of its own, as some other big players have done, but said he believes Intel will have a “significant business” as an Arm foundry provider that fabricates Arm devices for other companies. “There’s no reason any customer should use a Graviton instance,” he added, referring to AWS’s Arm-based Graviton processors (Graviton2, for example, is built on the Arm Neoverse N1 core).

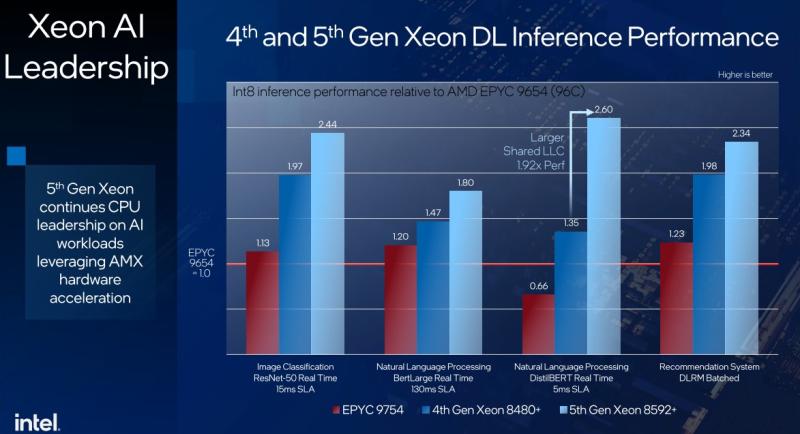

While most of the application benefits of AI PCs from various chipmakers have yet to be realized, Intel also briefed reporters on the performance of its Fifth Generation Xeon Scalable server processors, code-named Emerald Rapids, comparing them head-to-head with AMD’s offerings and arguing for the value of its CPUs alongside Nvidia’s wildly successful GPUs.

The AI Everywhere theme has been gathering steam for months, going back to the Intel Innovation event in September when Intel’s AI PC concept was laid out, relying on an Intel Core Ultra chip that Intel said will also be used in 2024 in ruggedized form for edge devices in retail, medical and industrial settings.

Intel on Thursday said Acer, ASUS, Dell, Dynabook, Gigabyte, HP, Lenovo, LG, Microsoft, MSI and Samsung have 230 AI PC laptop designs powered by Core Ultra, which it billed as the world’s first. Acer announced its Swift in September. Intel said Core Ultra will offer up to 11% more CPU compute capability than AMD Ryzen chips in an ultrathin PC.


Some big server buyers could wait for 6th Generation Xeon-based servers coming next year in hopes of a bigger step-up in performance, but Intel and analysts who cover the company note that millions of customers still rely on servers running First Generation Xeon.

An upgrade to the latest Xeon will mean a big improvement in total cost of ownership (TCO) for those customers, Intel told reporters. For customers who decide to wait for 6th Gen chips, Sierra Forest, with 288 cores, is coming in the first half of 2024, Intel said, with Granite Rapids and its improved P-core performance set to “closely follow.”

Karl Freund, founder and principal analyst at Cambrian-AI, said deciding whether to upgrade to 5th Gen Xeon will be a tough call for some data center managers. “Intel definitely has a strong value proposition to upgrade from Gen 1, Gen 2 and Gen 3, but if you can wait, it will only get better next year [with 6th gen], depending on how Intel prices each generation,” he said.

The real value of AI Everywhere

While “AI Everywhere” might sound like marketing-speak, it is more fundamental, according to executives at Intel. “Our customers are adding computer vision to every workload and now with generative AI the world is more interested” in AI Everywhere, said Bill Pearson, vice president of network and edge at Intel, speaking in an interview.

“Where you place compute is variable,” he added. “It makes perfect sense to put a device at the cloud, but the edge needs processing locally. There are more capabilities across the continuum. You can run a workload on a small or big device and everything in between and use the same software to do that workload with OpenVINO, an engine that’s been working for years.”

Beyond positioning Core Ultra and Xeon as separate processors for separate jobs, Intel argues that AI Everywhere will bring benefits across platforms through common software. Intel’s software toolkit includes OpenVINO, oneContainer Portal, Intel Neural Compressor, SynapseAI and Developer Cloud. Intel also offers plug-in extensions for PyTorch and TensorFlow.

Intel sees Core Ultra on AI PCs making a big difference in settings with multiple sensors, such as restaurants with multiple points of sale. AI will also help coordinate computers on the manufacturing floor, Pearson said.

“Common software across all deployments is a really big deal,” argued Jack Gold, an analyst at J. Gold Associates. “You can right-size the hardware for the job that way, without having to do ports. With hybrid cloud systems, being able to deploy remote cloud instances on edge computers is important and they must remain compatible across all instances of the cloud. Yes, AI Everywhere will be an important concept going forward, just as graphics once was a specialty and is now universal.”

Bob O’Donnell, chief analyst at Technalysis Research, said one advantage of Intel’s AI Everywhere concept is that it distributes AI jobs across a broader base of computers rather than leaving generative AI processing to data centers or cloud servers alone. “Generative AI is in such demand, and there aren’t resources to handle it all. Some has to be passed off to PCs,” he said. With common software across platforms, the management of AI could be simplified.

AMD was first to market with Ryzen chips offering AI acceleration in laptops, O’Donnell noted, but Intel is still the largest chipmaker in the PC market, and the PC market is where AI PCs could thrive after a couple of years of declining global sales. Core Ultra is a “big deal because when Intel does something, it drives the need,” he said. “Intel is working with Microsoft and major OEMs to make it happen.”

Gold added, “AI today is pretty limited at the edge and on the PC but that won’t be the case much longer” with Microsoft working to bring AI into its OS. AI is making inroads in video processing for security and other applications, for instance. “Hybrid Cloud means that edge compute will have more and more AI functionality, not always high-end machine learning which needs high performance accelerators, but increasingly running inference which can be handled by many CPUs, especially if they include an NPU (neural processing unit) subsystem acceleration. Even Arm based systems will be running AI inference workloads and doing some limited training and machine learning…”

“The AI Everywhere message is not just marketing hype,” Gold added. “It’s the way the technology is evolving. It may take one to two years for it to reach some critical mass.”

Intel said the industry is expanding AI to the PC and the edge to reduce reliance on the cloud, which can be unreliable or slow, and expensive in terms of energy and transit costs. Also, data sovereignty “dictates the need for AI built specifically for edge that harmoniously integrates with cloud,” Intel said in a presentation slide. Citing Gartner, Intel said more than 50% of enterprise-managed data will be created and processed outside the data center or cloud by 2025.

Energy savings

Intel has also made energy savings a centerpiece of its new Xeon chips, arguing that even as performance has improved in the latest generation, the chips require less electricity than prior generations did. The energy benchmarks will impress some customers, but price and performance are likely to remain the top motivators for buyers, according to analysts and buyers. More importantly, some companies are rushing to adopt AI with less regard for costs, at least at the start.

“We are just in the early innings of a long game with AI,” said Freund, at Cambrian-AI. “Companies are just getting on board with deploying AI to improve productivity, deliver better service and lower costs. The attitude now is ‘get it working, then we can worry about lower energy and other costs.’”

There is no question that AI workloads cause a significant increase in data center energy consumption, Freund said, but that legitimate cause for concern is “often overshadowed by the business imperatives of getting AI up and running as soon as possible with enough performance to meet business needs. Only once you check off those boxes does efficiency come into play.

“Energy consumption is just not a top driver and I don’t see it as a top-line consideration for most companies and cloud service providers in the short term. Big GPUs from the three major players (Nvidia, Intel and AMD) do consume more energy per rack, but they also deliver more performance. Efficiency can actually go up when you use, say, an Nvidia H200 over an older GPU for a given workload. Also, software advances for AI will drive down both capital and operating costs.”

Gold added: “It’s true that each chip is using less and less energy, so on a comparable basis they are being more efficient. However, many systems are scaling upwards to reach higher levels of compute necessary for some of the modern workloads. This means more chips and more power, but lower power chips are still saving energy compared to what the systems would have needed with older generation chips so it is a win, even as systems get bigger.”

During briefings with reporters, Intel said its Intel Core Ultra 7 165H processor showed a 25% reduction in power consumption compared with the Intel Core i7. The Core Ultra chip drew about 1,150 mW during Netflix video playback running on the low-power (LP) E-cores in its SoC tile.

In a comparison with AMD Ryzen 7, the Intel Core Ultra 7 drew less power across a range of tasks, from 7% less for web browsing to 79% less at Windows desktop idle.

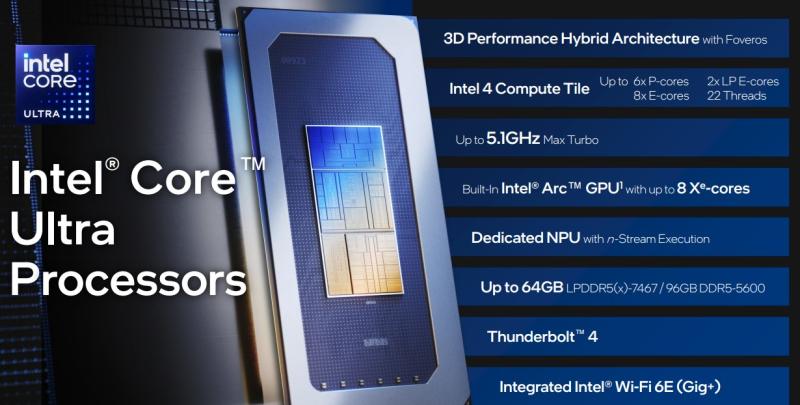

Core Ultra combines improved E-core and P-core performance. P-cores (performance cores) are physically larger and deliver more performance when needed, while E-cores (efficiency cores) are designed for power efficiency.

Core Ultra pointers

To the important question of what an AI PC is good for, Intel noted that “the AI PC experience is only as strong as the enabled software.” To that end, Intel said more than 100 independent software vendors will deliver 300 AI-accelerated features optimized for Intel Core Ultra, which Intel claims is three times more AI apps and frameworks than any silicon competitor.

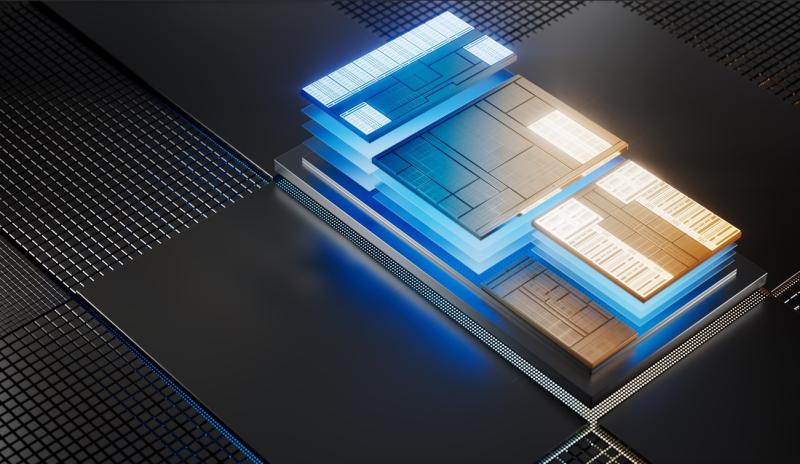

Core Ultra uses Intel 4 process technology with Foveros 3D advanced packaging. It has a built-in Arc GPU with eight Xe-cores and up to double the graphics performance of the previous generation. It has up to 16 cores (six P-cores, eight E-cores and two LP E-cores), a maximum turbo frequency of up to 5.1 GHz, and a maximum memory capacity of up to 64 GB of LPDDR5/x or up to 96 GB of DDR5.

It also supports the OpenVINO toolkit, which Intel says requires only minimal code changes, and offers connection speeds of 40 Gbps.

5th Gen Xeon pointers

The 5th Gen Xeon processor supports up to 64 cores per CPU and nearly three times the cache of the prior generation. It offers eight channels of DDR5 per CPU. Some 5th Gen Xeon instances from leading cloud service providers will also support CXL Type 3 workflows.

The 5th Gen processors are pin-compatible with the previous generation, and companies such as Cisco, Dell, HPE, IEIT Systems, Lenovo and Supermicro will offer single- and dual-socket systems starting in the first quarter of 2024, Intel said. Major cloud service providers (CSPs) are announcing 5th Gen Xeon instances throughout 2024.

Intel Gaudi2 AI accelerators vs. Nvidia GPUs

Even as Intel touted the importance of 5th Gen Xeon and Core Ultra in the AI landscape on Thursday, Nvidia continues to dominate the market for AI work with its GPUs. Fierce asked Intel to comment on how it expects to compete in that arena and received the following response from a spokesperson:

“Intel is focused on bringing AI everywhere and supporting an open AI software ecosystem to break down proprietary walled gardens and lower the barrier to entry that's essential to unlocking AI innovations for developers and customers at scale. We view AI as a rising tide for overall data center compute.

“Our Intel Gaudi2 is the only viable alternative in the market for LLM AI training and inference computing needs today, showing competitive performance with the latest MLPerf data compared to NVIDIA H100. A few notable points from the latest MLPerf results from November:

-- Gaudi2 demonstrated a 2x performance leap with the implementation of the FP8 data type on the v3.1 training GPT-3 benchmark, reducing time-to-train by more than half compared to the June MLPerf benchmark, completing the training in 153.58 minutes on 384 Intel Gaudi2 accelerators. The Gaudi2 accelerator supports FP8 in both E5M2 and E4M3 formats, with the option of delayed scaling when necessary.

-- While NVIDIA’s H100 outperformed Gaudi2 on GPT-3 and Stable Diffusion, Gaudi2 performance on ResNet was near parity against the H100.

-- This latest testing shows Intel’s Gaudi2 accelerators are closing the performance gap to NVIDIA’s leading GPUs."
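The spokesperson’s statement names two FP8 formats, E5M2 and E4M3, and a “delayed scaling” option without explaining them. The names describe how the eight bits are split after the sign bit: five exponent bits and two mantissa bits, or four exponent bits and three mantissa bits. The Python sketch below decodes every 8-bit pattern under each layout to show the trade-off. It assumes the conventions of the OCP 8-bit floating point specification (E5M2 keeps IEEE-style infinities and NaNs; E4M3 has no infinities and a single NaN code per sign); Intel’s statement does not confirm that Gaudi2 follows them exactly. All function names, including the hypothetical `delayed_scale` helper, are illustrative and are not part of any Intel software.

```python
# Sketch only: assumes OCP FP8 conventions (E4M3 has no infinities and one NaN
# code per sign; E5M2 follows IEEE-754 special values). Not confirmed for Gaudi2.

def decode_fp8(byte: int, exp_bits: int, man_bits: int, bias: int, ieee_specials: bool) -> float:
    """Decode one 8-bit pattern laid out as 1 sign bit, exp_bits, man_bits."""
    sign = -1.0 if (byte >> 7) & 1 else 1.0
    exp = (byte >> man_bits) & ((1 << exp_bits) - 1)
    man = byte & ((1 << man_bits) - 1)
    exp_all_ones = (1 << exp_bits) - 1
    man_all_ones = (1 << man_bits) - 1
    if ieee_specials and exp == exp_all_ones:
        # E5M2: the top exponent is reserved for infinity (mantissa 0) and NaN
        return sign * float("inf") if man == 0 else float("nan")
    if not ieee_specials and exp == exp_all_ones and man == man_all_ones:
        # E4M3: only S.1111.111 is NaN; other top-exponent codes are normal numbers
        return float("nan")
    if exp == 0:
        # Subnormal: no implicit leading 1
        return sign * (man / (1 << man_bits)) * 2.0 ** (1 - bias)
    return sign * (1 + man / (1 << man_bits)) * 2.0 ** (exp - bias)


def e4m3(byte: int) -> float:
    return decode_fp8(byte, exp_bits=4, man_bits=3, bias=7, ieee_specials=False)


def e5m2(byte: int) -> float:
    return decode_fp8(byte, exp_bits=5, man_bits=2, bias=15, ieee_specials=True)


def largest_finite(decode) -> float:
    """Scan all 256 codes and return the largest finite value."""
    values = (decode(b) for b in range(256))
    return max(v for v in values if v == v and v != float("inf"))  # v == v drops NaN


def delayed_scale(amax_history: list[float], fp8_max: float, margin: int = 0) -> float:
    """Hypothetical delayed-scaling helper: derive the scale factor from the
    max absolute values (amax) recorded in earlier iterations instead of
    scanning the current tensor first."""
    amax = max(amax_history)
    if amax == 0.0:
        return 1.0
    return fp8_max / amax / (2 ** margin)


if __name__ == "__main__":
    print("E4M3 max:", largest_finite(e4m3))  # 448.0
    print("E5M2 max:", largest_finite(e5m2))  # 57344.0
    print("E4M3 scale:", delayed_scale([1.0, 4.0, 2.0], largest_finite(e4m3)))  # 112.0
```

Under these assumptions, E4M3 trades range for precision and tops out at 448, while E5M2 reaches 57,344 with one fewer mantissa bit. That narrow range is why FP8 training multiplies tensors by a scale factor before casting; with delayed scaling, the factor comes from statistics gathered in previous steps, so the current tensor does not have to be scanned before it is converted.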

Gelsinger underscored the value of the Gaudi accelerator family by appearing on stage and holding up, for the first time, the next-generation Gaudi3 accelerator, which is due in 2024. Intel has boasted that the Gaudi family will capture a larger share of the accelerator market with competitive pricing.