Driver distraction is recognized as one of the main contributors to traffic accidents. National Highway Traffic Safety Administration (NHTSA) research has shown that 10% of drivers under the age of 20 who were involved in fatal car crashes had been in some way distracted at the time of the accident. Perhaps even more alarming is the estimate by the National Occupant Protection Use Survey (NOPUS) that at any given moment during daylight hours, approximately 660,000 people in the US are using cell phones or operating some form of electronic equipment while driving.

As the functionality built into modern cars continues to expand, and as we spend more of our daily lives using portable devices such as cell phones, the potential sources of distraction are only likely to increase. As well as calling and texting, or operating the vehicle's infotainment, climate control or navigation systems, drivers may be talking with passengers, eating or drinking, or dealing with disruptive children, among other distracting activities.

More sophisticated driver monitoring could help to combat this serious problem. The inclusion of advanced driver assistance system (ADAS) technology in modern vehicles already allows automatic emergency braking (AEB) and collision avoidance mechanisms to safeguard road users. It is, however, vital that these intervene only when it is appropriate.

Where the driver is mentally aware and bio-mechanically able to respond to a hazardous situation, an overly abrupt intervention by the vehicle's ADAS can prove irritating at the very least and, worse, could actually be dangerous. Conversely, if the ADAS is not responsive enough, its effectiveness will be negated.

If the ADAS can fully ascertain the driver's current state, it can more intelligently judge whether or not it will need to override the driver in order to react to the situation as it occurs, such as an imminent collision. To do so, the vehicle's ADAS needs to receive accurate, highly detailed data on what the driver is doing at that moment, so that a decision can be made about whether they are capable of reacting in time.

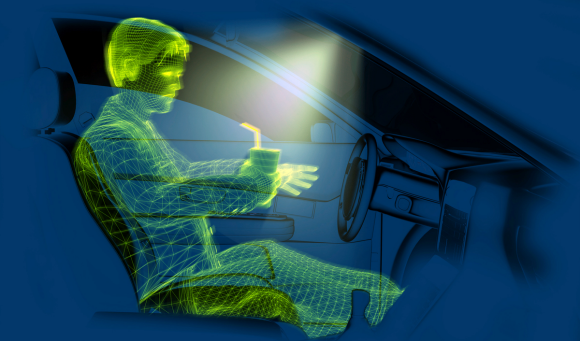

It is clear that real-time monitoring of the driver's position and movements is called for, with the acquired information made available to the ADAS. Access to 3D imaging data will, in principle, allow key parameters to be determined, such as the driver's body position, the direction in which their head is pointing and where their hands are placed relative to the steering wheel. From this, the vehicle's ADAS will be able to determine where the driver's attention is directed and whether they can take action in a timely manner if necessary.

The 3D information can be used to estimate the bio-mechanical reaction time the driver needs to fully re-engage, which can then be compared with the calculated event horizon (the time remaining before the hazard). If the driver is not sufficiently engaged to react quickly enough, the ADAS knows that, should a potentially dangerous situation arise, it may be required to step in autonomously. It is of course paramount that the handover between manual and autonomous operation happens seamlessly.
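The comparison described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how any production ADAS works: the `DriverPose` fields, the function names and every timing constant are hypothetical placeholders chosen for the example, not measured values.

```python
from dataclasses import dataclass


@dataclass
class DriverPose:
    """Hypothetical summary of what a 3D sensor might report."""
    head_yaw_deg: float   # 0 means the head is facing the road ahead
    hands_on_wheel: int   # 0, 1 or 2


# Placeholder values for illustration only; these are NOT measured data.
BASE_REACTION_S = 1.0       # assumed reaction time of a fully engaged driver
HEAD_TURN_PENALTY_S = 0.5   # assumed extra time if the head is turned away
HAND_PENALTY_S = 0.3        # assumed extra time per hand off the wheel
HEAD_YAW_LIMIT_DEG = 30.0   # assumed limit for "looking at the road"


def estimate_reaction_time_s(pose: DriverPose) -> float:
    """Estimate how long the driver needs to fully re-engage."""
    reaction = BASE_REACTION_S
    if abs(pose.head_yaw_deg) > HEAD_YAW_LIMIT_DEG:
        reaction += HEAD_TURN_PENALTY_S
    reaction += HAND_PENALTY_S * (2 - pose.hands_on_wheel)
    return reaction


def adas_should_intervene(pose: DriverPose, time_to_event_s: float) -> bool:
    """True if the driver is unlikely to react before the event horizon."""
    return estimate_reaction_time_s(pose) > time_to_event_s


attentive = DriverPose(head_yaw_deg=0.0, hands_on_wheel=2)
distracted = DriverPose(head_yaw_deg=60.0, hands_on_wheel=0)
print(adas_should_intervene(attentive, time_to_event_s=1.5))
print(adas_should_intervene(distracted, time_to_event_s=1.5))
```

With these placeholder numbers, the attentive driver is left in control while the distracted driver triggers an intervention. A real system would replace the fixed penalties with a validated bio-mechanical model.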

Automotive engineering teams have faced major challenges when looking to make use of 3D imaging based on the conventional optoelectronic technology that currently exists. The variations in ambient light conditions present one of the biggest of these challenges to overcome.

During a car journey the light levels can change substantially, depending upon whether the car is in sunlight, under cloud cover, inside a tunnel, beneath foliage, etc. The light variation throughout the cabin must also be factored in. One part of the cabin might be bathed in light, while another might be in shadow. In addition, the supporting electronics need to be rugged enough to cope with the uncompromising operational surroundings that characterize automotive applications. Functional safety elements must be incorporated into the design as well - there is no room for latency in such systems.

Finally, integration of the imaging system into the vehicle is likely to be somewhat problematic too. Optical sensor implementations are generally very specific to the vehicle, with any variation in the size and shape of the cabin, or the orientation of the seating or main console, affecting the operational effectiveness of the sensing system. This means that car manufacturers cannot benefit from the economies of scale that a platform approach applied across their entire range would offer.

Time of Flight Comes of Age

There are now opportunities for optical Time-of-Flight (ToF) to be used in an automotive context, supplanting conventional optoelectronic sensing solutions. Until now it has been mainly restricted to the consumer electronics and gaming sphere, but the emergence of more robust technology that can deal with greater variation in ambient light conditions means that its scope is now expanding far beyond this.

Optical ToF basically works as follows. An infra-red (IR) light source emits a wide angle beam. When this beam comes into contact with obstacles it is reflected back towards the ToF sensor. This sensor detects the reflected IR signal and compares it against a reference signal. By establishing what the phase shift is between these two signals, it is possible to calculate the distance between the sensor/source and the obstacle that the IR beam has been reflected off.
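The phase-to-distance step can be illustrated with a short Python sketch. It assumes a continuous-wave source modulated at a single frequency, which the description above implies but does not state; the function names and the 20 MHz figure are illustrative assumptions, not taken from any particular sensor.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def phase_to_distance_m(phase_shift_rad: float, modulation_freq_hz: float) -> float:
    """Convert a measured phase shift into a distance.

    The light travels to the obstacle and back, so the round trip is 2*d,
    which gives d = c * phase / (4 * pi * f_mod).
    """
    return SPEED_OF_LIGHT_M_S * phase_shift_rad / (4.0 * math.pi * modulation_freq_hz)


def unambiguous_range_m(modulation_freq_hz: float) -> float:
    """Largest distance measurable before the phase wraps past 2*pi."""
    return SPEED_OF_LIGHT_M_S / (2.0 * modulation_freq_hz)


# Assumed example: 20 MHz modulation and a measured phase shift of pi/2.
f_mod = 20e6
print(round(phase_to_distance_m(math.pi / 2, f_mod), 3))
print(round(unambiguous_range_m(f_mod), 3))
```

Because the phase wraps every full cycle, a single modulation frequency can only resolve distances up to the unambiguous range; at the assumed 20 MHz this is roughly 7.5 m, which comfortably covers a vehicle cabin.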

ToF technology uses an infrared light source to detect changes of position and motion inside the vehicle.

Expansion of Automotive Applications for ToF

Initial implementation of optical ToF technology within automotive environments has concentrated primarily on human machine interface (HMI) tasks. After lengthy development cycles, car models that employ ToF for gesture recognition are starting to come to market. Gestures allow an array of in-cabin operations to be carried out, such as accepting or rejecting calls, adjusting the climate control system, changing the volume of the music system or accessing the navigation system, without the driver having to look at the console and lose concentration on the road ahead.

Although this is already a major step forward, there is potential for much more ambitious things to be accomplished. By raising the level of sensitivity and broadening the field-of-view, ToF imaging apparatus will be able to map the driver's head and upper body in 3D.

Furthermore, it will allow the position of other vehicle occupants to be captured and their size to be estimated. For example, distinguishing between a child and an adult is of great importance when deploying air bags, to ensure that excessive force is not applied.

Through the deployment of advanced ToF technology, the heightened operational performance needed to enable robust in-cabin gesture, driver and occupant sensing is now becoming available. It is only a matter of time before these features are not only commonplace in luxury car models but are also implemented in mid-range and possibly even economy vehicles.

About the Author

Gaetan Koers is a Product Manager for Optical Sensors at Melexis. He graduated from the Vrije Universiteit Brussel in 2000 with an engineering degree in Telecommunications. In September 2000 he joined the Lab for Micro- and Photonelectronics (LAMI) in a GOA doctoral research position. He obtained his Ph.D. in 2006 with a dissertation on system-level analysis and design of noise suppression techniques in active millimeter-wave (75 to 220 GHz) focal-plane imaging systems. Since 2007, he has been working at Melexis Technologies NV in the OPTO business unit, in project management and application engineering functions focused on CMOS image sensors and Time-of-Flight sensor development. Since 2012, he has been responsible for Melexis' 3D optical sensor product line.