The race to bring highly automated vehicles (HAVs, or robocars) to the masses is gaining momentum and attracting plenty of capital. One of the most recent developments is Ford's billion-dollar investment in Argo AI, a start-up specializing in artificial intelligence, robotics and self-driving cars. Not long ago, GM made a similar move by acquiring Cruise Automation. These are just two of many examples of the cash flowing into the development and refinement of intelligent car systems.

The race is on for automated-vehicle development.

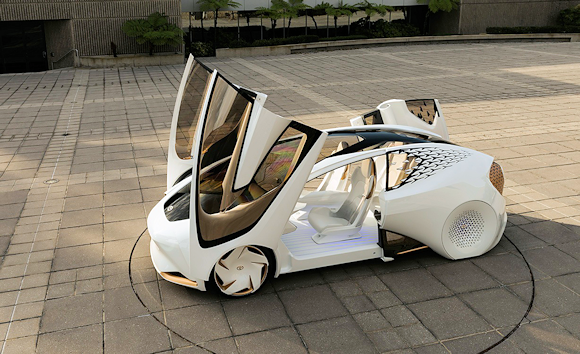

From Toyota's perspective, you will soon be able to have a meaningful relationship with your car. At CES 2017 the Japanese carmaker unveiled the Concept-i, a futuristic concept car meant to give us a "glimpse into a future mobility that is warm, friendly and revolves around you". The vehicle's AI persona, called Yui, is designed to improve safety by using light, sound and even touch to communicate critical information. It's an intriguing vision, but before it can become reality, many obstacles to improving the intelligence of HAVs must be overcome.

Toyota's take on the car of the future: The Concept-i.


Deterministic Algorithms And Deep Learning: A Dual Track To Smart Cars

Computer systems are great at performing tasks governed by rigid rules. As the rules get fuzzier, it becomes harder for a computer to know what to do. For example, a traffic light gives precise instructions on when to stop and when to go, but merging into moving traffic is far trickier because the right move is not clear-cut. The ability to make these complex decisions is known as driving policy, and it is where humans still excel over machines.

The straightforward rules of the road can be handled by deterministic vision algorithms such as histogram of oriented gradients (HOG). Mastering driving policy, on the other hand, requires deep learning, using convolutional neural networks (CNNs) to mimic the complex decision-making of the brain. These two tracks are advancing in parallel, and both are essential to current HAV implementations.
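To make the deterministic track concrete, here is a minimal NumPy sketch of the core histogram of oriented gradients computation: per-pixel gradients, magnitude-weighted orientation histograms over 8×8-pixel cells, and L2 normalization over overlapping 2×2-cell blocks. The cell size, 9 unsigned orientation bins and block size follow the commonly cited Dalal and Triggs defaults; the function name `hog_descriptor` is hypothetical, and the sketch omits refinements such as bilinear bin interpolation and Gaussian block weighting. Every step is a fixed arithmetic rule, so the same image always yields the same feature vector.

```python
import numpy as np


def hog_descriptor(image: np.ndarray, cell: int = 8, bins: int = 9, block: int = 2) -> np.ndarray:
    """Simplified HOG feature vector for a 2-D grayscale image (illustrative sketch)."""
    img = image.astype(np.float64)

    # Central-difference gradients; border pixels keep a zero gradient.
    gx = np.zeros_like(img)
    gy = np.zeros_like(img)
    gx[:, 1:-1] = img[:, 2:] - img[:, :-2]
    gy[1:-1, :] = img[2:, :] - img[:-2, :]
    magnitude = np.hypot(gx, gy)
    # Unsigned orientation folded into [0, 180) degrees.
    angle = np.rad2deg(np.arctan2(gy, gx)) % 180.0

    n_cy, n_cx = img.shape[0] // cell, img.shape[1] // cell
    hist = np.zeros((n_cy, n_cx, bins))
    bin_width = 180.0 / bins
    for cy in range(n_cy):
        for cx in range(n_cx):
            rows = slice(cy * cell, (cy + 1) * cell)
            cols = slice(cx * cell, (cx + 1) * cell)
            # "% bins" guards against a float rounding up to exactly 180 degrees.
            idx = (angle[rows, cols] // bin_width).astype(int) % bins
            # Each pixel votes for its orientation bin, weighted by gradient magnitude.
            hist[cy, cx] = np.bincount(
                idx.ravel(), weights=magnitude[rows, cols].ravel(), minlength=bins
            )

    # L2-normalize overlapping block x block groups of cells for illumination invariance.
    features = []
    for by in range(n_cy - block + 1):
        for bx in range(n_cx - block + 1):
            v = hist[by:by + block, bx:bx + block].ravel()
            features.append(v / np.sqrt(np.sum(v ** 2) + 1e-6))
    return np.concatenate(features) if features else np.zeros(0)
```

For a 64×128-pixel detection window (passed as a 128-row by 64-column array), this yields 16 × 8 cells, 15 × 7 blocks and a 3,780-value feature vector, which a detector would then feed to a classifier such as a linear SVM.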

Designing An Intelligent Vision Solution And The Quest For The Mobileye Killer

One of the challenges in building super-smart cars is designing the right system-on-chip (SoC). There are different approaches to designing "the brain" of an HAV; I recently wrote a post about choosing between a centralized and an edge approach. Either way, the system must be powerful enough to handle CNN processing and deliver quick results, while remaining highly power-efficient. Cost is also a big issue, because an HAV needs multiple vision sensors to "see" in all directions. A solution that is too expensive will always be a deal-breaker.

In the advanced driver-assistance systems (ADAS) sphere, Mobileye is currently considered the leading solution provider by a significant margin. According to EETimes.com's account of the race to build the "Mobileye killer", companies ranging from MediaTek and Renesas to NXP and Ambarella are working on alternatives to Mobileye's EyeQ chips. Per the article, SoC designers will need expertise in CNNs and real-time video analytics, a best-in-class digital process node, and access to the best image-processing IP.

Crowdsourcing And Information Sharing: Is It Too Soon?

Another important step toward smarter vehicles involves infrastructure. "HD Mapping" and "Connectivity" (vehicle-to-vehicle, or V2V, and vehicle-to-infrastructure, or V2I, communication) can greatly improve an HAV's ability to anticipate road conditions ahead and respond quickly and safely.

Real-time, crowdsourced maps of current road conditions would let every vehicle benefit from what the others observe. However, using these technologies requires a consensus across the automotive industry and a common infrastructure. In this heated, cutthroat race, it's hard to imagine automakers collaborating on such an endeavor.
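As a rough illustration of the crowdsourcing idea, the sketch below aggregates hazard reports from many vehicles and treats a hazard on a road segment as confirmed only when several distinct vehicles have reported it within a recent time window. Everything here is hypothetical: the `Report` and `CrowdsourcedRoadMap` names, the segment IDs and the threshold values are illustrative assumptions, not part of any real HD-map or V2X protocol.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Report:
    """One hazard observation sent by a vehicle (hypothetical message format)."""
    vehicle_id: str
    segment_id: str   # hypothetical road-segment key in a shared map
    hazard: str       # e.g. "ice" or "stalled_vehicle"
    timestamp: float  # seconds


class CrowdsourcedRoadMap:
    """Confirms a hazard once enough distinct vehicles have reported it recently."""

    def __init__(self, min_vehicles: int = 3, window_s: float = 300.0):
        self.min_vehicles = min_vehicles
        self.window_s = window_s
        # (segment_id, hazard) -> list of reports received so far
        self._reports = defaultdict(list)

    def submit(self, report: Report) -> None:
        self._reports[(report.segment_id, report.hazard)].append(report)

    def confirmed_hazards(self, segment_id: str, now: float) -> set:
        confirmed = set()
        for (seg, hazard), reports in self._reports.items():
            if seg != segment_id:
                continue
            # Count each vehicle once and ignore reports older than the time window.
            recent = {r.vehicle_id for r in reports if now - r.timestamp <= self.window_s}
            if len(recent) >= self.min_vehicles:
                confirmed.add(hazard)
        return confirmed


# Usage: two different cars must report ice within 60 s before it is confirmed.
road_map = CrowdsourcedRoadMap(min_vehicles=2, window_s=60.0)
road_map.submit(Report("car-a", "seg-42", "ice", 0.0))
road_map.submit(Report("car-b", "seg-42", "ice", 30.0))
print(road_map.confirmed_hazards("seg-42", now=40.0))
```

Requiring agreement from multiple vehicles guards against a single faulty sensor. The sketch deliberately sidesteps the harder problem the article raises: getting automakers to agree on shared message formats and a common infrastructure in the first place.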

About the Author

Jeff VanWashenova is the Director of Automotive Market Segment at CEVA. He holds a B.Sc. in Electrical Engineering and a Master of Business Administration from Michigan State University.
