Convolutional Neuronal Networks

The machine-learning algorithm Convolutional Neuronal Network (CNN) copies the way the human brain processes images. With CNN, the system learns to recognize apples or cancer signs by processing thousands of pictures. Invented in the end of 1980s, CNNs are coming into bloom just now, when hardware and software have the necessary features and big image libraries are established. For example, CNNs are better than humans in recognizing license plates or dog breeds. Once trained, these CNNs can shrink to a few megabytes and become highly useable in embedded applications.

CNN Application

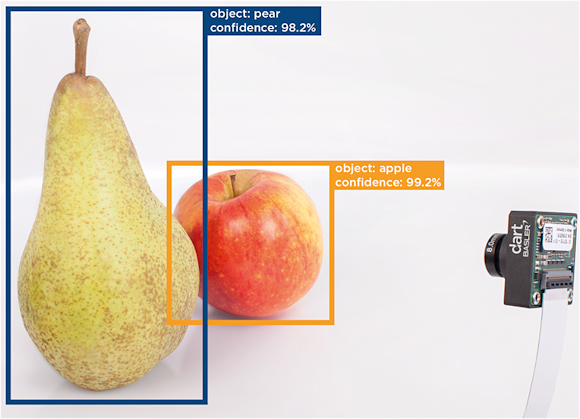

Let us say you need a vision system to separate apples from pears or salmon from trout on a conveyor belt. In the traditional approach, you would need to manually define the criteria like shape, color, size etc., which the system would use to do the sorting (see figure 1). While the distinction between an apple and a pear is quite easy to describe, determining cancer abnormalities on a histopathological slide is not that simple. The more complex the classification, the longer it takes to design these so-called handcrafted features.

Fig. 1: A trained system can locate the object and determine its nature with a high accuracy. Here the camera looks at an apple and a pear.

An even bigger disadvantage is that this solution is designed only for the one specific classification problem and is not flexible. If you need to separate skipjack tuna from bluefin tuna, you'll need to start the process all over again.

Here machine-learning algorithms, most significantly CNNs, change the game. CNNs model the human brain in processing images and identifying objects. Processing thousands of pre-classified images of say pears and apples, CNN allows the system to automatically determine and learn the classification features. You don't have to define the criteria, the system finds the crucial features autonomously.

The CNN model is based on the research of the visual cortex, the part of the brain that processes images. In 1968, the Canadian neurobiologist David Hubel and the Swedish neurophysiologist Torsten Wiesel published their research on the structure of the visual cortex.

The perception network of the cortex consists of several layers of cells. In the front, there are the so-called simple cells that are activated by simple structures like straight lines at a specific angle. At the same time, cells that are located in the back layer respond to complex patterns like pictures of eyes, for example. Neighboring cells have similar and overlapping receptive fields and so a complete map of visual space is formed.

In 1989, these findings inspired Yan leCun, a French-born computer scientist, to develop the Convolutional Neural Networks machine-learning algorithm. In CNNs, the images are transmitted through a series of layers and undergo different mathematical operations including convolution. Like in the visual cortex, the network determines simple (low-level) and complex (high-level) features. Eventually the network determines what is in the image based on previously learned patterns. If the network has more than three layers, it is considered deep and the process is called "deep learning".

The training process would go like this. A big set of images is presented to the network one at a time. The network determines what is pictured and then sees the correct answer. If the initial answer was wrong, CNN automatically adjusts the classification parameters. The more images the network is trained with, the more accurate it can perform the classification.

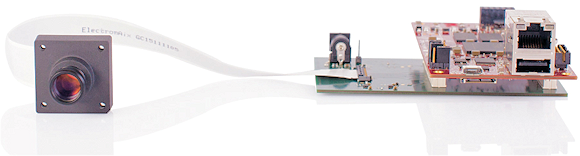

Fig. 2: After the system is trained, it can work with limited resources like here in an embedded setting.

Network Training

Training of the network is a very computation-intensive process and until recently, the CNN could not be used for image processing, because there was no hardware that could handle the process. Conventional central processing units (CPUs) process data serially, that is one operation at a time. Because training of a CNN requires lots of data and a tremendous amount of processing, it would take ages to train it. Usually the developer needs to change the parameters several times to find the right optimizations settings – and run everything again.

With the development of Graphical Processing Units (GPUs), data can be processed in parallel, significantly reducing the training time down to several days. Initially engineered for processing real-time high-resolution 3D graphics, GPUs evolved into multi-core systems manipulating large blocks of data in parallel.

To give developers access to the GPU's virtual instruction set and parallel computational elements, NVIDIA, a leading provider of graphic chips, introduced the software layer CUDA. This allows using the GPU for general-purpose processing like deep learning.

Open Source & FPGAs

Today, there are several open-source software libraries that can be used by any developer of a machine learning system free of charge; Caffe, Torch, Theano, to name the most prominent ones. In November 2015, Google released their machine learning software TensorFlow, which is also open-source and is used in various Google Products such as Gmail and Google Photos.

Another way to process data in parallel is to use Field-Programmable Gate Arrays (FPGAs). FPGAs have a variety of advantages: efficient digital signal processor (DSP) resources and extensive on-chip memory and bandwidth. Convolutions and other mathematical operations can be performed very fast. In fact, a recently published paper from Microsoft Research stated that using FPGAs could be ten times more power efficient compared to GPUs.

FPGAs allow building systems with limited resources, which at the same time can perform mathematical operations fast. In addition, after the network has been trained, it stays small and does not need as much memory as during the training.

The small size of the network and its high performance allow using CNNs in embedded vision applications, like driver assistance. An advantage for embedded applications is direct access of the camera to the FPGA, as for example in the Basler dart cameras with a BCON interface. This prevents protocol overhead and leads to more efficient implementation.

Training Challenges

Another challenging task in developing CNNs is acquiring imaging data for their training. You need an extensive amount of images for certain kinds of objects, for example, apples or trout. Here one can use freely accessible databases with pre-classified pictures.

For example, ImageNet hosts over 14 million classified images. There are also highly specialized databases like the German Traffic Sign Recognition Benchmark with more than 50,000 images of German traffic signs. For the latter, CNNs reached a better recognition rate (99.46%) than humans (98.84%) in 2012. Having access to hundreds of millions of classified images, Google and Facebook and other big companies can create high-class CNN's with high recognition rates.

CNNs can be applied in autonomous vehicles, medical devices and in classical machine vision applications. A CNN does not get tired or distracted and can efficiently search for tiniest abnormalities in huge images (for example, searching a histopathological slice for signs of breast cancer). In many applications, like facial recognition, CNNs contribute to a decrease in error rate.

Bottom Line

According to the Embedded Vision Developer Survey from the Embedded Vision Alliance conducted in July 2016, 77% of surveyed developers were using or planning to use neural networks to perform computer vision functions in a product or service. That's up from 61% in the previous survey conducted in November 2015. Then 86% of developers surveyed in July and using neural networks were using CNNs as neural network topology.

This clearly shows that CNNs are gaining popularity in the industry. Today the available software and hardware allows applying this technology even by mid-size companies with limited resources.

About the Author

Peter Behringer is Product Market Manager at Basler and specializes in embedded cameras. While obtaining his Masters of Science in Medical Engineering from the University of Lübeck, he worked as a research trainee for the Charité Berlin, a prestigious teaching hospital in Berlin. Later, being a research trainee in the Harvard Medical School, Peter developed software to support in-bore MRI-guided targeted prostate biopsy. He also published several academic papers on image processing.

Contact

Peter Behringer – Product Market Manager

Tel. +49 4102 463 662

Fax +49 4102 463 46662

E-Mail: [email protected]

Basler AG

An der Strusbek 60-62

22926 Ahrensburg

Germany

Related Stories

Choosing The Best Vision System For Highly Automated Vehicles

Frame Grabber Market Size, Share, Trends and Growth in Global and Chinese Industry 2016 - 2021

PathPartner Technology Announces Launch of Machine Vision Camera Module