The latest advances and quality improvements in MEMS-based sensor technology have led to low-cost, small, low-power, high-performance devices that are well suited to applications that were not feasible just a few years ago. These high-performance sensors are being adopted at an accelerating rate in smartphones and other portable and wearable devices.

Application developers are using existing sensor ecosystems to develop complex and accurate applications, including immersive Virtual Reality (VR), Augmented Reality (AR), Pedestrian Dead Reckoning (PDR), Electronic Image Stabilization (EIS), Optical Image Stabilization (OIS), and complex gesture recognition, to name a few. In particular, because of their ultra-low noise density, high resolution, and high stability over time, MEMS-based inertial sensors (accelerometers and gyroscopes) are being used to deliver smooth interaction and a harmonious user experience.

In this article, we focus on gesture recognition as part of human-computer interaction (HCI) and provide an overview of the hardware and software implementation of gesture recognition and its applications. We propose a 2-D mouse cursor trajectory method based on an Inertial Measurement Unit (IMU), which combines a 3-axis accelerometer and a 3-axis gyroscope. We illustrate how an IMU can be used for complex gesture recognition to improve efficiency and accuracy and to make gesture recognition intuitive in real time. We also describe different gesture recognition algorithms.

Introduction

The use of gesture recognition in HCI is not a new topic. Some recent TVs and game consoles offer camera-based gesture recognition that works without a remote control or a game controller. This approach uses image-processing technology to recognize a user's hand gestures. Its accuracy depends on the camera resolution and calibration, the light level of the environment, the camera view angles, the update rate, and the sensitivity of the camera in capturing fast motion.

Using gesture recognition as an HCI user interface entails analyzing a user's body motion (especially hand gesture) to trigger specific and well-defined functions in a mobile device. For example, a single tap action can be used in a smartphone to answer a phone call and double tap to end the call. The tilting of the phone upwards or downwards from the horizontal position can be used to scroll up or down the address book at a comfortable speed. A single tap can be used to select a person's information and flipping the phone to left/down and back can be used to return to the previous address book.

Similar to speech recognition, the motion-based gesture recognition compares the captured motion with predefined gesture patterns stored in the database to see whether or not the new gesture is recognized. Therefore, the accuracy of the gesture recognition depends on the success rate of the registered gestures and the rejection rate of any other non-registered gestures. The response time is also important for gesture recognition.
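The two accuracy figures mentioned above can be computed from a set of labeled test trials. The following is a minimal sketch, assuming a hypothetical trial format in which each trial is a (true gesture, recognized gesture) pair and `None` stands for "not a registered gesture" or "rejected"; the function name is illustrative only.

```python
def recognition_rates(trials):
    """Return (success_rate, rejection_rate) for a list of trials.

    Each trial is a (true_label, predicted_label) pair. A true_label of None
    marks a non-registered gesture; a predicted_label of None marks a rejection.
    """
    registered = [(t, p) for t, p in trials if t is not None]
    unregistered = [(t, p) for t, p in trials if t is None]

    # Registered gestures count as a success only when the right label comes back.
    successes = sum(1 for t, p in registered if p == t)
    # Non-registered gestures count as correct only when they are rejected.
    rejections = sum(1 for _, p in unregistered if p is None)

    success_rate = successes / len(registered) if registered else 0.0
    rejection_rate = rejections / len(unregistered) if unregistered else 0.0
    return success_rate, rejection_rate


trials = [("2", "2"), ("Z", "2"), ("S", "S"), (None, None), (None, "5")]
success_rate, rejection_rate = recognition_rates(trials)
```

In this example two of the three registered gestures are recognized correctly and one of the two non-registered gestures is rejected.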

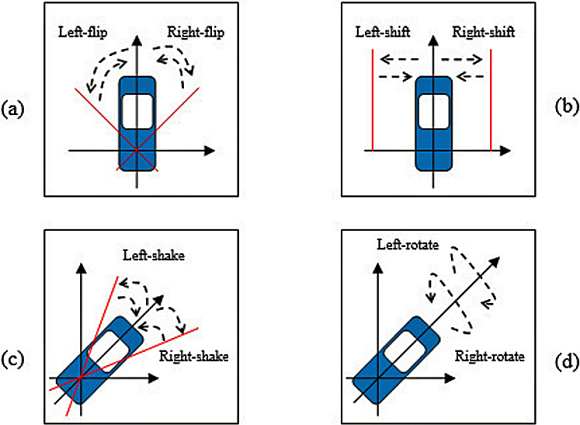

Human hand gestures can be categorized into two groups. The first group is simple gestures, as shown in Figure 1: single tap, double tap, and tilting or rotating motions of a handheld device.

Fig. 1: Examples of simple gestures

Figures 1-a and 1-b show examples of simple gestures when the start position of the handheld device is vertical. A left-flip gesture means rotating the device to the left by a certain angle and then rotating it back to the original position. A left-shift means moving the device to the left by a certain distance with linear acceleration and then moving it back to the original position. The right-direction gestures are defined in the same way.

Figures 1-c and 1-d show examples of simple gestures when the start position of the device is horizontal. A left-shake means rotating the device around its vertical axis to the left by a certain angle and then rotating it back to the original position. A left-rotate means rotating the device around its longitudinal axis so that its left side tilts down by a certain angle and then rotating it back to the original position. The right-direction gestures are defined in the same way.

These simple gestures can be implemented using only a 3-axis accelerometer.
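As a rough illustration of how a tilt gesture can be derived from the accelerometer alone, the sketch below computes pitch and roll from the gravity direction while the device is held nearly still. The axis convention, the 10-degree dead zone, and the mapping of positive pitch to "up" are assumptions made for this example, not values from a specific device.

```python
import math


def tilt_angles_deg(ax, ay, az):
    """Pitch and roll in degrees from one accelerometer sample given in g.

    Valid only while the device is nearly static, so that the measured
    acceleration is dominated by gravity. Axis convention is assumed.
    """
    pitch = math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))
    roll = math.degrees(math.atan2(ay, az))
    return pitch, roll


def scroll_direction(pitch_deg, dead_zone_deg=10.0):
    """Map a pitch angle to a scroll command, ignoring small tilts."""
    if pitch_deg > dead_zone_deg:
        return "up"
    if pitch_deg < -dead_zone_deg:
        return "down"
    return "none"


flat = tilt_angles_deg(0.0, 0.0, 1.0)        # device lying flat
tilted = tilt_angles_deg(-0.5, 0.0, 0.866)   # tilted by about 30 degrees
```

The dead zone keeps the address book from scrolling when the phone is held almost, but not exactly, horizontal.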


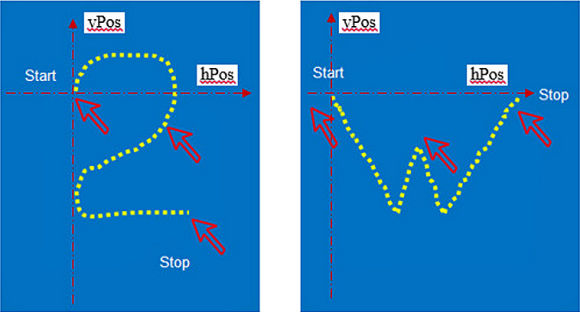

The second type is complex gestures, as shown in figure 2. These include letters, digits and other shapes, and even signatures to unlock the hand-held device or speed dial numbers.

Fig. 2: Examples of complex gestures

Complex gestures can be implemented using a 3-axis accelerometer and a 3-axis gyroscope, which is also called a 6-DOF IMU (6 Degrees of Freedom inertial measurement unit).

As one possible solution, the LSM6DS3 IMU series from STMicroelectronics is suitable for advanced complex gesture recognition. These inertial modules feature significantly reduced current consumption, ultra-low noise density, a high output data rate, and a number of embedded functions that free the system microcontroller for more complex tasks.

Current IMU modules are integrated into a single chip. The accelerometer and gyroscope raw data are available through the SPI or I2C interface. The sampling rate for sensor data acquisition can be set, for example, to 50 Hz or 100 Hz. The full-scale ranges can be set to ±4 g for the accelerometer and ±2000 dps (degrees per second) for the gyroscope to avoid sensor data saturation during fast gestures.
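The raw samples read over SPI or I2C are signed 16-bit counts that have to be scaled to physical units before use. The sketch below shows that conversion for the ±4 g and ±2000 dps ranges. The sensitivity constants (0.122 mg/LSB and 70 mdps/LSB) and the low-byte-first register order are assumptions that should be verified against the datasheet of the part in use; the function names are illustrative.

```python
# Assumed sensitivities for the selected full-scale ranges (verify in the datasheet).
ACCEL_MG_PER_LSB = 0.122    # accelerometer at +/-4 g
GYRO_MDPS_PER_LSB = 70.0    # gyroscope at +/-2000 dps


def to_int16(low_byte, high_byte):
    """Combine two register bytes (low byte first, assumed) into a signed value."""
    value = (high_byte << 8) | low_byte
    return value - 65536 if value >= 32768 else value


def accel_g(raw):
    """Convert a raw accelerometer count to g."""
    return raw * ACCEL_MG_PER_LSB / 1000.0


def gyro_dps(raw):
    """Convert a raw gyroscope count to degrees per second."""
    return raw * GYRO_MDPS_PER_LSB / 1000.0


one_g = accel_g(8197)                         # close to 1 g at +/-4 g full scale
slow_turn = gyro_dps(to_int16(0xE8, 0x03))    # raw count 1000
```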

Any of these IMUs can be used to implement complex gesture algorithms. The following sections discuss the hardware and software implementation of complex gestures.

Two-Dimensional Mouse Cursor Trajectory Method

In the Harry Potter movies, certain hand gestures with a wand are used to perform feats of magic. If the gesture is recognized, a predefined event is triggered. This kind of gesture is considered complex. Both simple and complex gestures can be projected onto a 2-D screen to show the gesture trajectory in real time, like a mouse cursor moving on the screen.

A single 3-axis accelerometer can also be used for complex gesture recognition to some extent. However, the accelerometer cannot distinguish the linear acceleration from the earth gravity vector. In addition, the accelerometer cannot detect the angular rotation of the handheld device around the vertical axis. Therefore, it is difficult for a single accelerometer to distinguish the gesture pattern for digit "2" from letter "Z", and the gesture pattern for digit "5" from letter "S".

For this reason, the cursor control using a single accelerometer in an air mouse application is not as smooth and predictable as using both an accelerometer and a gyroscope (6-DoF IMU).

It is important to note that the sensors do not need to be calibrated for gesture recognition accuracy because the gesture motion is relative to the start point of a gesture.

As mentioned before, gesture recognition needs to capture a set of sensor raw data from a motion gesture and then compare it with a predefined template by running the recognition algorithm to see whether or not the gesture is recognized.

If the six columns of raw sensor data (3 axes of accelerometer data and 3 axes of gyroscope data), sampled at the microcontroller's rate, are fed directly into the gesture recognition algorithm, the algorithm will take longer to output a result.

Therefore, the 2-D mouse cursor trajectory method can be used to fuse the raw data of the accelerometer and gyroscope to obtain two columns of time series data (hPos and vPos as shown in figure 2) and then feed them into the recognition algorithm. This can improve the recognition algorithm efficiency and accuracy, and the user can then easily see on a screen how her/his gestures are being processed in real time.

In addition, independent of the position of the handheld device at the starting point, the 2-D mouse cursor trajectory method can always plot the gesture on the screen of a PC or a smart phone in real time as long as its longitudinal axis is pointing forward. Therefore, this method can be generalized to be user independent.


The following procedure shows three steps for complex gesture recognition using a 2-D mouse cursor trajectory method.

Step 1: Detecting the start and stop time point of a gesture.

The easiest way is to press and hold a button on a handheld device to indicate the start time point of a gesture and to release the button to indicate the end of the gesture.

Another way is to hold the handheld device stationary for a very short time before the start of the gesture. During this time, the accelerometer and gyroscope data on X, Y and Z axes will show six straight lines. The microcontroller will then start to update the sensors' raw data at this start time point. After the gesture is finished, the user can hold the device stationary again for a very short time. The microcontroller then recognizes that this is the stop time point of the gesture.
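The stationary-hold approach can be sketched as a sliding-window test: a window counts as stationary when all six channels are nearly flat, and the gesture is the span between two stationary periods. This is only an illustration; the window length and the spread thresholds are assumed values that would need tuning, and the function names are hypothetical.

```python
def is_stationary(window, accel_spread_g=0.03, gyro_spread_dps=3.0):
    """True if all six channels are nearly flat over the window.

    window: list of (ax, ay, az, gx, gy, gz) samples, accelerometer in g
    and gyroscope in dps. Using the spread (max - min) rather than the
    absolute value means constant sensor offsets do not matter.
    """
    if not window:
        return False
    for axis in range(6):
        limit = accel_spread_g if axis < 3 else gyro_spread_dps
        values = [sample[axis] for sample in window]
        if max(values) - min(values) > limit:
            return False
    return True


def find_gesture_bounds(samples, window_len=10):
    """Return (start, stop) sample indices of the gesture, or None.

    The gesture is samples[start:stop]: it begins with the first sample that
    breaks an initial stationary period and ends where the next fully
    stationary window begins.
    """
    n_windows = len(samples) - window_len + 1
    still = [is_stationary(samples[i:i + window_len])
             for i in range(max(n_windows, 0))]
    try:
        first_still = still.index(True)
        first_moving = still.index(False, first_still)
        next_still = still.index(True, first_moving)
    except ValueError:
        return None  # no complete still / moving / still pattern found
    return first_moving + window_len - 1, next_still


rest = [(0.0, 0.0, 1.0, 0.0, 0.0, 0.0)] * 15
motion = [(0.2, 0.0, 1.0, 100.0 if k % 2 == 0 else -100.0, 0.0, 0.0)
          for k in range(20)]
bounds = find_gesture_bounds(rest + motion + rest)
```

At a 100 Hz sampling rate, a 10-sample window corresponds to a hold of about 0.1 s before and after the gesture.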

Step 2: Obtaining hPos and vPos data of the mouse cursor trajectory.

When a gesture is being performed in 3-D space, it is a good idea to display the cursor trajectory on a 2-D screen. This lets the user see immediately whether the cursor trajectory resembles the gesture that was performed multiple times to train the gesture recognition algorithm, or whether the attempt is an undesirable gesture that should be rejected.

A quaternion-based complementary filter or a Kalman filter sensor fusion algorithm can be developed using the raw data from the accelerometer and gyroscope to obtain the dynamic pitch, roll and yaw Euler angles. Then hPos and vPos for the cursor coordinates on the screen can be obtained as

As per the equation above, C11 to C23 are constant parameters for scaling and speed of the cursor movement.
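The sketch below illustrates this step under stated assumptions. For brevity it uses a basic angle-domain complementary filter with a small-angle approximation, not the quaternion-based filter described above, and it assumes that hPos and vPos are linear combinations of the changes in pitch, roll and yaw since the start of the gesture, weighted by the 2x3 constants C11 to C23. The axis mapping, the filter weight and the coefficient values are hypothetical.

```python
import math


def complementary_update(angles, accel_g, gyro_dps, dt, alpha=0.98):
    """One fusion step; angles is (pitch, roll, yaw) in degrees.

    Assumed axis mapping: gx drives roll, gy drives pitch, gz drives yaw.
    """
    pitch, roll, yaw = angles
    ax, ay, az = accel_g
    gx, gy, gz = gyro_dps

    # Gyroscope path: integrate the angular rates over one sample period.
    pitch_gyro = pitch + gy * dt
    roll_gyro = roll + gx * dt
    yaw = yaw + gz * dt  # gravity gives no yaw reference, so yaw is gyro-only

    # Accelerometer path: tilt angles from the gravity direction.
    pitch_acc = math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))
    roll_acc = math.degrees(math.atan2(ay, az))

    # Blend: trust the gyroscope short term, the accelerometer long term.
    pitch = alpha * pitch_gyro + (1.0 - alpha) * pitch_acc
    roll = alpha * roll_gyro + (1.0 - alpha) * roll_acc
    return pitch, roll, yaw


def cursor_position(angles, start_angles, coeffs, center):
    """Map Euler angles to (hPos, vPos), relative to the gesture start.

    coeffs is ((C11, C12, C13), (C21, C22, C23)), applied here to the
    (pitch, roll, yaw) changes; this linear form is an assumption.
    """
    delta = [a - s for a, s in zip(angles, start_angles)]
    h_pos = center[0] + sum(c * d for c, d in zip(coeffs[0], delta))
    v_pos = center[1] + sum(c * d for c, d in zip(coeffs[1], delta))
    return h_pos, v_pos


# Hypothetical coefficients: yaw moves the cursor horizontally, pitch vertically.
C = ((0.0, 0.0, -20.0), (-20.0, 0.0, 0.0))
angles = complementary_update((0.0, 0.0, 0.0), (0.0, 0.0, 1.0),
                              (0.0, 0.0, 10.0), dt=0.01)
cursor = cursor_position((0.0, 0.0, 10.0), (0.0, 0.0, 0.0), C, (960, 540))
```

Subtracting the start angles makes the trajectory relative to the start point of the gesture, so the cursor can begin at the chosen screen position regardless of how the device is held.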

Step 3: Generating gesture recognition results.

At the start point of a gesture when the handheld device is stationary, hPos and vPos can be manually set to the center of the screen. The cursor will then show the real time trajectory of the gesture as shown in Figure 3.

Fig. 3: 2-D cursor trajectory of gesture in real time

At the stop point of the gesture, the cursor can be anywhere on the screen. The gesture recognition algorithm will then process the newly obtained [hPos vPos] sequence and compare it against the registered [hPos vPos] array templates in the database to see if the gesture is recognized or not.


Gesture Recognition Algorithms

As MEMS sensors have evolved, some companies have been using inertial sensors to provide advanced software solutions that transform sensor data into contextual information for use in sensor-enabled products. These solutions include combinations of sensor fusion, gesture recognition, context-aware state detection, and system software to enable use across many consumer electronics products. Devices that use this technology today include virtual reality headsets, augmented reality glasses, smart watches and fitness bands, TV remote controls and game controllers, smartphones, and robots [7].

There are many gesture recognition algorithms available today. The following two sections provide a general and high-level overview of the two most popular algorithms known as Dynamic Time Warping (DTW) and Hidden Markov Models (HMMs). Both DTW and HMM have software development kits (SDK) available for users to speed up gesture recognition development.

A registered gesture in the database means that the [hPos vPos] data array for that gesture has been trained and characterized as a template for the user of the handheld device. If another person uses the same device, the gestures may need to be trained again for recognition.

Each gesture template in the database has the same structure of [hPos vPos] data array. Both DTW and HMMs can enable users to create their own customized gestures in the database at any time.

Dynamic Time Warping Algorithm

The DTW algorithm was widely used for speech recognition during the 1970s. It has since been adopted in other areas such as gesture recognition, data mining, time series clustering, and image pattern matching, to name a few.

The DTW algorithm is used to determine the similarity between two sets of time series data, which may differ in length because they were recorded over different durations or at different speeds. For a gesture recognition application, one data set is the gesture template [hPos vPos] in the database and the other is the newly captured gesture [hPos_new vPos_new] time sequence data. The DTW algorithm can be applied to align these two motion data sets in the time dimension in order to determine their similarity while minimizing the effect of nonlinear distortion. If they do not match, the next template in the database is loaded for comparison. This process can continue until all templates have been loaded.

The DTW algorithm builds an accumulated distance matrix between the two input sequences. The accumulated value at the end of the best alignment path is the DTW distance, which is also called the distance function or the cost function. If the distance value is small, the two sequences match well. If it is large, the newly captured gesture is very different from the registered template.
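The matching procedure described above can be sketched in a few lines. This is a minimal, unoptimized version of the classic DTW recurrence using the Euclidean distance between [hPos vPos] points, followed by a loop over the templates with a rejection threshold. The function names, the template dictionary and the threshold value are assumptions for illustration, not part of any particular SDK.

```python
import math


def dtw_distance(seq_a, seq_b):
    """DTW distance between two sequences of (hPos, vPos) points."""
    n, m = len(seq_a), len(seq_b)
    inf = float("inf")
    # cost[i][j] = best accumulated distance aligning seq_a[:i] with seq_b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            a, b = seq_a[i - 1], seq_b[j - 1]
            local = math.hypot(a[0] - b[0], a[1] - b[1])
            # Extend the cheapest of the three allowed predecessor alignments.
            cost[i][j] = local + min(cost[i - 1][j],      # repeat point of seq_b
                                     cost[i][j - 1],      # repeat point of seq_a
                                     cost[i - 1][j - 1])  # advance both
    return cost[n][m]


def recognize(new_gesture, templates, threshold):
    """Return the best-matching template name, or None if nothing is close enough."""
    best_name, best_distance = None, float("inf")
    for name, template in templates.items():
        distance = dtw_distance(new_gesture, template)
        if distance < best_distance:
            best_name, best_distance = name, distance
    return best_name if best_distance <= threshold else None


templates = {
    "right": [(0, 0), (1, 0), (2, 0), (3, 0)],
    "up": [(0, 0), (0, 1), (0, 2), (0, 3)],
}
# The same rightward stroke performed at half speed (each point sampled twice).
slow_right = [(0, 0), (0, 0), (1, 0), (1, 0), (2, 0), (2, 0), (3, 0), (3, 0)]
result = recognize(slow_right, templates, threshold=1.0)
```

The slower stroke still matches the "right" template with zero distance because DTW allows points to be repeated during alignment, which is what makes the comparison tolerant of gesture speed.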

The DTW is simple to implement and effective for a small number of gesture templates. However, it does not have a generalized way to obtain an averaged template for one gesture from a large amount of training samples. Therefore, the DTW may need to have multiple templates for the same gesture if it is to be user independent.

Hidden Markov Models Algorithm in Smartphones

The HMM algorithm is a very powerful statistical method of characterizing an observed data sequence based on Bayes' rule. A Hidden Markov Model consists of states connected by transitions. It has been used for speech recognition and image pattern recognition as well. The HMM delivers precise gesture recognition performance at the cost of higher complexity and computational load.
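To make the idea of states connected by transitions more concrete, the sketch below scores an observation sequence against a small discrete HMM using the scaled forward algorithm. It assumes the gesture trajectory has already been converted into a sequence of integer symbols (for example, quantized movement directions); that preprocessing and the model values shown are hypothetical.

```python
import math


def forward_log_likelihood(obs, start_p, trans_p, emit_p):
    """Log-probability of an observation sequence under a discrete HMM.

    obs:      list of integer observation symbols
    start_p:  start_p[s] is the probability of starting in state s
    trans_p:  trans_p[r][s] is the probability of moving from state r to s
    emit_p:   emit_p[s][o] is the probability of emitting symbol o in state s
    """
    if not obs:
        return 0.0
    n_states = len(start_p)
    alpha = [start_p[s] * emit_p[s][obs[0]] for s in range(n_states)]
    log_likelihood = 0.0
    for t in range(len(obs)):
        if t > 0:
            alpha = [sum(alpha[r] * trans_p[r][s] for r in range(n_states))
                     * emit_p[s][obs[t]] for s in range(n_states)]
        # Rescale at every step to avoid underflow on long sequences.
        scale = sum(alpha)
        if scale == 0.0:
            return float("-inf")  # sequence is impossible under this model
        alpha = [a / scale for a in alpha]
        log_likelihood += math.log(scale)
    return log_likelihood


# Hypothetical two-state left-to-right model: symbol 0 first, then symbol 1.
start_p = [1.0, 0.0]
trans_p = [[0.5, 0.5], [0.0, 1.0]]
emit_p = [[0.9, 0.1], [0.1, 0.9]]
matching = forward_log_likelihood([0, 0, 1, 1], start_p, trans_p, emit_p)
reversed_order = forward_log_likelihood([1, 1, 0, 0], start_p, trans_p, emit_p)
```

A sequence that follows the order the model expects receives a much higher score than the same symbols in reverse order, which is the property a recognizer relies on when it compares one trained model per gesture.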

Based on the 2-D cursor trajectory method, a smartphone application demo can be developed for the HMM algorithm's gesture recognition by fusing the data from the embedded accelerometer and gyroscope to obtain the [hPos vPos] array. The application may have the following steps:

- If the application does not have registered templates saved in the database, it enters the training stage. Otherwise, it goes directly to the recognition stage.

- In the training stage, the application displays the gesture for each digit, letter or other shape on the screen one by one. It also allows the user to define custom gestures, for example a signature.

- The user then holds the smartphone and performs the same gesture a few times in 3-D space, following the direction of each gesture template, with the mouse cursor trajectory displayed on the screen in real time, to train the HMM model.

- After the training process is completed, the application enters the recognition stage.

- The user can perform the same pre-trained gestures again to see if they are recognized or not.

- The user can also perform any other non-registered gestures that have not been trained to see if they are rejected or not.
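The stage selection and the accept-or-reject decision in the steps above can be sketched as follows. The sketch assumes that each registered gesture has already produced a log-likelihood score for the new gesture and that a per-sample score threshold separates registered from non-registered gestures. The threshold value and the function names are hypothetical.

```python
def initial_stage(templates):
    """Pick the first stage of the demo from the contents of the database."""
    return "recognition" if templates else "training"


def decide(scores, n_samples, min_score_per_sample=-3.0):
    """Return the recognized gesture name, or None to reject.

    scores: dict mapping gesture name to the log-likelihood of the new gesture
    under that gesture's trained model. Dividing by the sequence length keeps
    the threshold comparable for short and long gestures.
    """
    if not scores or n_samples <= 0:
        return None
    best = max(scores, key=scores.get)
    if scores[best] / n_samples < min_score_per_sample:
        return None  # even the best model explains the data poorly
    return best


accepted = decide({"2": -8.0, "Z": -20.0}, n_samples=4)
rejected = decide({"2": -40.0, "Z": -44.0}, n_samples=4)
```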

Conclusion

Unlike image-based gesture recognition, a 3-D motion gesture made with a handheld device leaves no visible trace and disappears once the motion is completed. In addition, the raw sensor data from the same gesture is not always the same when the gesture is performed at different speeds or when the mobile device is held at different tilt angles.

The latest improvements in MEMS sensor technology and performance have added new dimensions to the developers' perspectives of using these sensors in applications, which weren't achievable in the past because of previous inadequate sensor performance.

Advanced software solutions are being developed by incorporating gesture recognition algorithms and the concept of contextual awareness to create exciting applications for infotainment, gaming, education, and many other areas.

References

- LSM6DSL datasheet: http://www.st.com/en/mems-and-sensors/lsm6dsl.html

- LSM6DS3H datasheet: http://www.st.com/en/mems-and-sensors/lsm6ds3h.html

- LSM6DS3 datasheet: http://www.st.com/en/mems-and-sensors/lsm6ds3.html

- P. Keir, J. Payne, J. Elgoyhen, M. Horner, M. Naef, P. Anderson, "Gesture recognition with Non-referenced Tracking", Proceedings of the 2006 IEEE Symposium on 3D User Interfaces, pp.151-158, March 2006.

- Z. He, L. Jin, L. Zhen, J. Huang, "Gesture recognition based on 3D accelerometer for cell phones interaction", IEEE Asia Pacific Conference on Circuits and Systems, pp.217-220, November 2008.

- J. Esfandyari et al, "Solutions for MEMS sensor fusion", Solid State Technology, July, 2011. http://www.electroiq.com/articles/sst/print/volume-54/issue-7/features/cover-article/solutions-for-mems-sensor-fusion.html

- Dave Karlin, Modern User Experiences Enabled by Motion Technology, SensorCon 2014; http://hillcrestlabs.com/resources/download-materials/download-info/presentation-wearables-devcon-2014-low-power-motion-for-hmds

- Rabiner, L.R, "A tutorial on hidden Markov models and selected applications in speech recognition", Proceedings of the IEEE, pp. 257-286, 1989.

About the Author

Jay Esfandyari holds a master's degree and a Ph.D. in Electrical Engineering from the University of Technology of Vienna, Austria. In addition to more than 60 publications and conference contributions, he has more than 20 years of industry experience in Semiconductor Technology, integrated circuits fabrication processes, MEMS & sensors design and development, sensor networking, product marketing, business development and product strategy. Jay is currently the Director of Global Product Marketing Strategy at STMicroelectronics.
