The automotive industry is experiencing a seismic shift. The rapid development of technology to power autonomous vehicles is disrupting everything from individual car ownership to the design of cities. While top-tier automakers and tech companies alike are plotting autonomous innovations, a vital component of full vehicle autonomy is the development of sensors that enable the car to “see” the world around it and react better than a human driver.

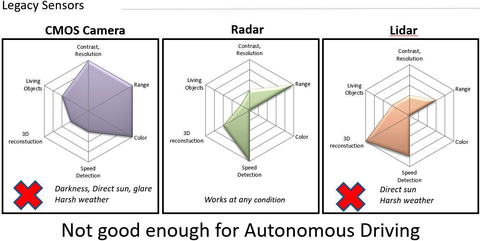

Current sensing technologies, like LiDAR, radar, and cameras, have perception problems that require a human driver to be ready to take control of the car at any moment. For this reason, the demands on sensors have grown in both quantity and capability: to achieve Level 3-5 autonomous driving, vehicles need not only more sensors, but more effective sensors.

radar, and LiDAR cannot function in dynamic lighting or harsh weather conditions.

This article explores the current sensing capabilities of technologies such as radar and LiDAR, and explains why far-infrared (FIR) sensing, fused with those technologies, is ultimately what will make Level-3, Level-4, and Level-5 autonomous driving achievable.

Flaws of current sensors

Radar sensors can detect objects that are far away but cannot identify the object. Cameras, on the other hand, can more effectively determine what an object is, but only at a closer range. For these reasons, radar sensors and cameras are used in conjunction to provide autonomous vehicles with more complete detection and coverage: A radar sensor detects an object down the road, and a camera provides a more detailed picture of the object as it gets closer.

LiDAR (light detection and ranging) sensors have also become an essential component of autonomous vehicles’ detection and coverage capabilities. Like radar, LiDAR sends out signals and measures the distance to an object from the reflection of those signals, but it uses laser light rather than radar’s radio waves. While LiDAR sensors provide a wider field-of-view than the more directional view of radar and cameras, LiDAR is still cost-prohibitive for mass market applications. Several companies are attempting to alleviate this issue by producing lower-cost LiDAR sensors, but these low-resolution LiDAR sensors cannot effectively detect obstacles that are far away, which leaves autonomous vehicles with less time to react. Unfortunately, simply waiting for the price of LiDAR sensors to fall could slow the mass deployment of autonomous vehicles.
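The ranging principle shared by radar and LiDAR, measuring distance from the reflection of an emitted signal, can be illustrated with a short time-of-flight calculation. This is a minimal sketch rather than any vendor's implementation: the function names are hypothetical, and it assumes an idealized single pulse travelling at the vacuum speed of light with no processing delay.

```python
# Time-of-flight ranging, as used in principle by pulsed radar and LiDAR.
# Assumption: idealized pulse, vacuum speed of light, no electronics delay.

# Speed of light in vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance to a reflector given the round-trip time of a pulse."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels out and back, so halve the total path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_for_range(distance_m: float) -> float:
    """Inverse relation: echo delay expected from a target at distance_m."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # Illustrative target distances, not taken from the article.
    for distance_m in (10.0, 100.0, 200.0):
        delay_ns = round_trip_for_range(distance_m) * 1e9
        print(f"{distance_m:6.1f} m -> echo after {delay_ns:8.1f} ns")
```

The delays involved are on the order of nanoseconds to microseconds, which hints at why timing precision and return-signal strength matter so much for detecting distant obstacles.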

Besides visibility shortcomings, another principal obstacle to achieving Level-5 autonomous driving is the environment. Current sensing technologies are compromised to some degree in adverse weather conditions. For example, while radar can still detect faraway objects in heavy fog, haze, or at night, most cameras have near-field sight limitations that constrain their ability to see in foul weather or darkness. Sudden changes in lighting are also a problem. For instance, as a vehicle enters or exits a tunnel, cameras and LiDAR sensors can be momentarily blinded, just as a human driver’s eyes take a few seconds to adjust to sudden darkness or bright light.

Today’s sight and perception solutions also struggle to classify what they see accurately. While a camera may successfully detect a person or an animal, its image-processing software may not be able to distinguish a real person or animal from a picture of one on an advertisement, a building, or a bus.

What’s needed to bring full autonomy to market

Automakers and AV developers have plans to deploy fully autonomous vehicles on public roads by the beginning of 2020. To meet this deadline and produce any vehicle over Level-2 autonomy, AV developers need sensors that eliminate existing vision and perception weaknesses and guarantee complete detection and coverage of a vehicle’s surroundings 24/7, in any environment and condition. With less than perfect perception in current sensing technologies, human drivers always need to be ready to take control of the autonomous vehicle. And one of the main reasons humans still must take control of AVs is that their sensors fail amid adverse weather conditions.

Without improved sensor capability and accuracy providing safe and reliable operations in all weather conditions, mass market adoption of self-driving vehicles cannot be realized.

FIR is the solution

A new type of sensor that employs far infrared (FIR) technology can fill the reliability gaps and perception problems left by other sensors. FIR has been used for decades in defense, security, firefighting, and construction, making it a mature and proven technology. Now, the technology is being used to help make full vehicle autonomy possible.

A FIR-based camera uses far-infrared light waves to detect differences in the heat (thermal radiation) naturally emitted by objects and converts this data into an image. As well as capturing the temperature of an object or material, a FIR camera captures an object’s emissivity, that is, how effectively it emits heat. Since every object has a different emissivity, a FIR camera can sense any object in its path. With this information, the camera can build a visual picture of the roadway that helps the vehicle operate independently and safely.
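The role of temperature and emissivity can be made concrete with the Stefan-Boltzmann law for a gray body, j = εσT⁴. The sketch below is only an illustration of that physical relationship, not a model of any particular camera: the function name is hypothetical, and the temperatures and emissivities are assumed round values chosen for the example.

```python
# Gray-body thermal emission via the Stefan-Boltzmann law: j = eps * sigma * T^4.
# Assumption: objects are ideal gray bodies; sample values are illustrative only.

STEFAN_BOLTZMANN = 5.670374419e-8  # W / (m^2 K^4), CODATA value


def radiant_exitance(temperature_k: float, emissivity: float) -> float:
    """Power radiated per unit area (W/m^2) by a gray body."""
    if temperature_k < 0:
        raise ValueError("temperature must be in kelvin and non-negative")
    if not 0.0 <= emissivity <= 1.0:
        raise ValueError("emissivity must lie between 0 and 1")
    return emissivity * STEFAN_BOLTZMANN * temperature_k ** 4


if __name__ == "__main__":
    # Hypothetical scene: all numbers below are assumptions for illustration.
    scene = {
        "pedestrian (305 K, eps 0.98)": (305.0, 0.98),
        "road surface (283 K, eps 0.93)": (283.0, 0.93),
        "polished metal sign (283 K, eps 0.10)": (283.0, 0.10),
    }
    for label, (temp_k, eps) in scene.items():
        print(f"{label}: {radiant_exitance(temp_k, eps):7.1f} W/m^2")
```

In this toy scene the road surface and the metal sign are at the same temperature yet radiate very differently, which is the kind of contrast emissivity gives a thermal image even when temperatures match.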

segmentation and providing an accurate analysis of the vehicle’s surroundings.

Why FIR beats other perception solutions

FIR technology is necessary for the deployment and mass market adoption of autonomous vehicles because it is the only sensor capable of providing the complete and reliable coverage needed to make AVs safe. Other optical sensors used on cars only capture images that are visible to the human eye. But FIR cameras sense the far-infrared band, at wavelengths far longer than visible light, allowing them to detect objects that may not otherwise be perceptible to a camera, radar, or LiDAR. Moreover, unlike radar and LiDAR sensors that must transmit and receive signals, a FIR camera only collects signals; this makes it a “passive” technology. With no moving parts, a FIR camera simply senses signals from objects radiating heat.
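Why thermal emission from people, animals, and vehicles falls in the far-infrared rather than the visible band can be estimated with Wien's displacement law, λ_peak = b / T. The sketch below is a back-of-the-envelope illustration under the assumption of ideal black-body emission; the function name and the sample temperatures are chosen here for the example and do not come from the article.

```python
# Wien's displacement law: wavelength at which black-body emission peaks.
# Assumption: ideal black bodies; sample temperatures are illustrative only.

WIEN_DISPLACEMENT_M_K = 2.897771955e-3  # metre-kelvin, CODATA value


def peak_wavelength_um(temperature_k: float) -> float:
    """Peak emission wavelength in micrometres for a body at temperature_k."""
    if temperature_k <= 0:
        raise ValueError("temperature must be positive (kelvin)")
    return WIEN_DISPLACEMENT_M_K / temperature_k * 1e6


if __name__ == "__main__":
    samples = {
        "warm body, about 310 K": 310.0,
        "cool road surface, about 280 K": 280.0,
        "surface of the Sun, about 5800 K": 5800.0,
    }
    for label, temp_k in samples.items():
        print(f"{label}: peak near {peak_wavelength_um(temp_k):.2f} um")
```

Objects near everyday temperatures peak at roughly 9 to 10 micrometres, while visible light spans roughly 0.4 to 0.7 micrometres, so a sensor tuned to that longer band sees radiation the objects emit themselves rather than light they reflect.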

shows objects undetected by current sensing technology are visible with a FIR solution.

There are currently three leading FIR sensor companies: Autoliv, FLIR Systems, and AdaSky. AdaSky is an Israeli startup that recently developed Viper, a high-resolution thermal camera that passively collects FIR signals, converts them to high-resolution VGA video, and applies deep-learning computer vision algorithms to sense and analyze its surroundings.

With this advanced technology, FIR cameras can overcome the complicated weather and lighting conditions from which all other sensors suffer. In comparison, today’s sensing technologies are limited. Even working together, current sensors cannot provide total or accurate coverage of a vehicle’s surroundings in every situation. Only FIR sensors generate a new layer of information originating from a different band of the electromagnetic spectrum, significantly increasing performance for classification, identification, and detection of objects and of vehicle surroundings, at both near and far range.

By creating a visual representation of the vehicle’s surroundings, FIR fills the gaps left by other sensors to produce total detection and coverage in any weather condition and in any environment, whether it be urban, rural, or highway driving, or a combination of all three. For example, on the highway, it’s crucial to have long-range sensing so that if an object is detected, there is ample time for the vehicle to decide to stop, even when it is traveling at high speed.
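The link between speed and required sensing range can be sketched with basic kinematics: the vehicle covers some distance while the system reacts, then more while it brakes. This is a rough illustration only; the function names are hypothetical, and the latency and deceleration figures are assumptions picked for the example, not measured values for any vehicle or sensor.

```python
# Minimum detection range needed to stop before an obstacle:
#   distance = speed * latency + speed^2 / (2 * deceleration)
# Assumptions: constant deceleration, fixed system latency, level dry road.


def kmh_to_ms(speed_kmh: float) -> float:
    """Convert kilometres per hour to metres per second."""
    return speed_kmh / 3.6


def stopping_distance_m(speed_m_per_s: float,
                        latency_s: float,
                        decel_m_per_s2: float) -> float:
    """Distance travelled from first detection until the vehicle is stopped."""
    if speed_m_per_s < 0 or latency_s < 0:
        raise ValueError("speed and latency must be non-negative")
    if decel_m_per_s2 <= 0:
        raise ValueError("deceleration must be positive")
    reaction_m = speed_m_per_s * latency_s                   # before braking starts
    braking_m = speed_m_per_s ** 2 / (2.0 * decel_m_per_s2)  # while braking
    return reaction_m + braking_m


if __name__ == "__main__":
    LATENCY_S = 0.5        # assumed detect-and-decide delay
    DECEL_M_PER_S2 = 7.0   # assumed hard braking on dry asphalt
    for speed_kmh in (50.0, 90.0, 130.0):
        needed = stopping_distance_m(kmh_to_ms(speed_kmh), LATENCY_S, DECEL_M_PER_S2)
        print(f"{speed_kmh:5.0f} km/h -> needs about {needed:6.1f} m of sensing range")
```

Because the braking term grows with the square of speed, highway driving demands far more detection range than city driving, which is why long-range performance dominates the highway case.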

In urban areas, a wider field-of-view takes priority so that the vehicle can detect pedestrians and cyclists on the sidewalk and at crosswalks. FIR is needed for Level-3 autonomous solutions, and it is an essential enabler for Level-4 and up. To achieve Level-5 autonomy, AV developers anticipate that each vehicle should be equipped with several FIR cameras to enable wide coverage and a comprehensive understanding of its surroundings. Besides satisfying general safety needs, automakers favor the use of multiple FIR sensors because the U.S. Department of Transportation’s Federal Automated Vehicles policy requires redundancy for certain critical AV systems, and most OEMs and tier-one suppliers are gearing up to use multiple sensors and other components as fail-safe measures.

Automakers’ goal of deploying autonomous vehicles on public roads by the beginning of the next decade can only be achieved if vehicles gain the ability to operate safely and reliably without the monitored control of a human driver, which is not possible with current sensing technologies’ perception problems. FIR cameras are the only technology that can deliver complete classification, identification, and detection of a vehicle’s surroundings in any environment or weather condition and, ultimately, make the mass market adoption of fully autonomous vehicles a reality.

About the author

Yakov Shaharabani, CEO and Board Member for AdaSky, is a strategic thinker with extensive experience as a leader dealing with complex environments and highly tense situations. Much of Yakov’s leadership and strategic experience was gained in 34 years of service in the Israeli Air Force, rising from young pilot, through the positions of squadron leader and base commander, to the most senior positions as one of the very few Generals in the Israeli Air Force. Yakov is the founder of SNH Strategies LTD, a company focusing on strategic consulting and strategic leadership education. He earned his B.S. with honors in economics and computer sciences, and an M.A. (cum laude) in National Resource Strategy from the National Defense University (NDU), Washington, D.C.