When Seeing Becomes Insightful – Artificial Intelligence in Autonomous Driving

by Jeff VanWashenova & Árpád Takács

The development of self-driving technology has undergone a major paradigm shift in the past few years. While Advanced Driver Assistance Systems (ADAS) still play an important role in Level 1–3 highly automated vehicles (HAV), the challenges in reaching full autonomous capability may be too substantial for today’s active safety features to evolve gradually into full autonomy. Meeting these challenges will require new approaches to the hardware, software and algorithms used in today’s active safety systems. For example, controlling these vehicles with classical computer algorithms and if/then rules is extremely difficult, if not impossible. Artificial intelligence (AI) and machine learning will be critical in opening up new possibilities in active safety and can help enable intelligent, scalable and adaptive systems that are fully autonomous.

In addition to the differences at a computational level between HAV and fully autonomous vehicles, there is also a divergence in vehicle architecture. For fully autonomous vehicles, the goal is to create a single, integrated, low-power, high-performance central decision unit that perceives and processes the environment, makes decisions and controls the vehicle.

For autonomous vehicles, the centralized processor is responsible for vision, radar, LiDAR and vehicle control systems.

The Ecosystem of Self-Driving

There is much debate about which approach to take in self-driving car design, and the pace at which the technology evolves makes the choice even harder. Regardless of which of the three major “schools” of self-driving we aim for (camera, radar or LiDAR based technologies), the software can be categorized into four hierarchical layers: recognition, location, motion (including trajectory planning and decision making) and control.

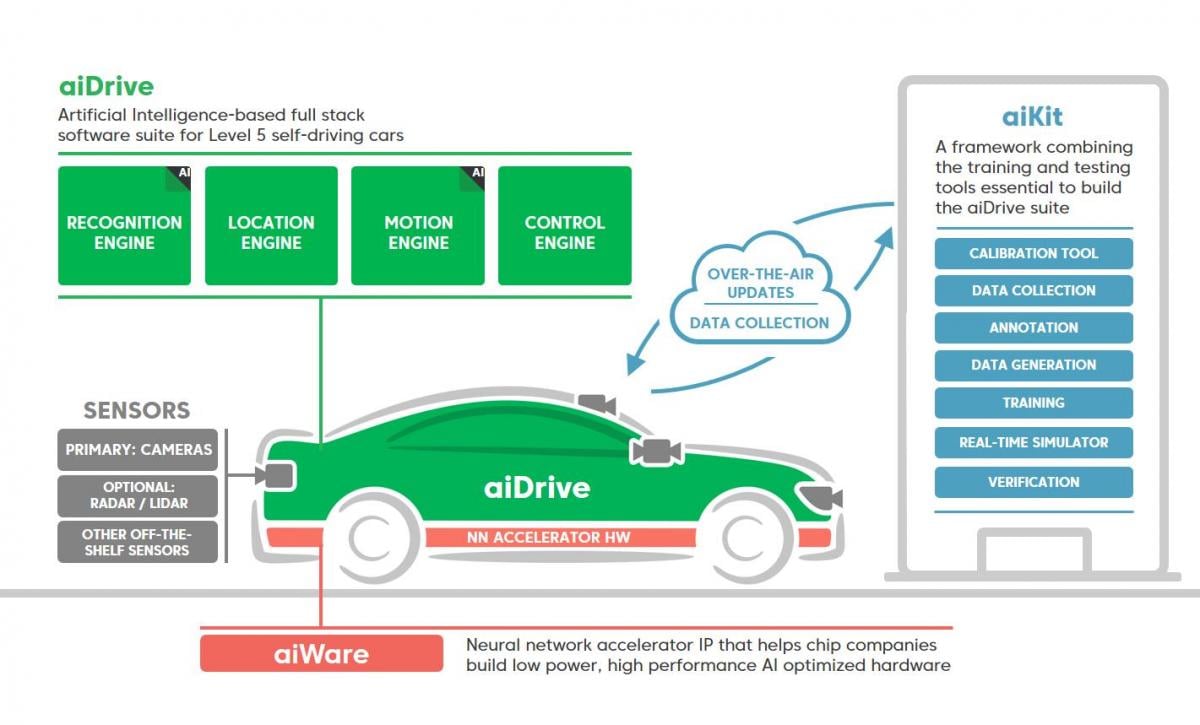

Figure 1: The AImotive ecosystem of self-driving

1. Recognition engine: Responsible for perceiving and understanding the environment. Processes data from traditional sensors such as cameras, CAN-bus data, and custom sensors. AI-based object detection and classification provide a list of surrounding objects by category, orientation, dimensions and distance.

Figure 2: Artificial Intelligence Based, mono-camera inference

2. Location engine: Enhances conventional map data with 3D landmark information to ensure precise self-localization and navigation. Raw GPS data alone is not accurate enough to position the car reliably, and differential GPS (DGPS) also has limited performance in situations like urban canyons. While HD maps give a statistically good solution to this problem, full coverage of rural areas remains a challenge for this technology. Alternatively, landmark information, feature-based localization and detailed lane information can improve performance. Drivable surface detection, free space detection, and lane detection are critical functions of the location engine.

3. Motion engine: Takes positioning and navigation output and combines it with the predicted state of the surroundings to decide the right trajectory for the vehicle. Decision making based on the perceived environment is a challenging task even for human drivers. Deep learning techniques are used to track region-specific object classes and predict their behavior. The predicted environment influences the free space calculations and thus the desired driving path and possible trajectories.

4. Control engine: Responsible for taking the outputs of the system’s other engines and controlling the vehicle via low-level actuation such as steering, braking, and acceleration. The control engine transforms the self-driving car into a robotic system that needs to be ready to execute the desired, yet ever-changing trajectory. The vehicle’s dynamic behavior, which depends directly on road quality in terms of smoothness, friction and slope, extends the requirements of free space detection, while control methods rely strongly on AI-based approaches.

Figure 3: Aggregate: Recognition, Location, Motion, Control
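
To make the data flow between the four engines concrete, the following is a minimal sketch in Python. It is not AImotive's implementation: every name (`DetectedObject`, `Pose`, `Command`, `recognize`, `locate`, `plan`, `control`, `step`), the lane width, the time gap, the blending weights and the controller gains are assumptions chosen purely for illustration, and simple hand-written rules stand in for the AI-based components described above.

```python
from dataclasses import dataclass
from typing import List, Tuple

# All constants below are illustrative assumptions, not values from the article.
LANE_HALF_WIDTH_M = 1.75   # assumed half width of the ego lane
TIME_GAP_S = 2.0           # assumed following time gap


@dataclass
class DetectedObject:
    """One entry of the recognition engine's object list (hypothetical layout)."""
    category: str       # e.g. "car", "pedestrian"
    distance_m: float   # distance ahead of the ego vehicle
    lateral_m: float    # lateral offset from the ego lane centre
    width_m: float


@dataclass
class Pose:
    """Ego position estimate produced by the location engine."""
    lane_offset_m: float   # lateral offset from the lane centre
    heading_rad: float     # heading error relative to the lane direction


@dataclass
class Command:
    """Low-level actuation request produced by the control engine."""
    steering_rad: float
    acceleration_mps2: float


def recognize(raw: List[Tuple[str, float, float, float]]) -> List[DetectedObject]:
    """Recognition: turn raw detector output into a typed object list."""
    return [DetectedObject(*r) for r in raw]


def locate(gps_offset_m: float, landmark_offset_m: float, heading_rad: float) -> Pose:
    """Location: blend a coarse GPS estimate with a landmark-based one.
    The 0.2 / 0.8 weights are arbitrary and only show the idea."""
    return Pose(0.2 * gps_offset_m + 0.8 * landmark_offset_m, heading_rad)


def plan(objects: List[DetectedObject], cruise_speed_mps: float) -> float:
    """Motion: pick a target speed, slowing down for objects in the ego lane."""
    in_lane = [o.distance_m for o in objects
               if abs(o.lateral_m) - o.width_m / 2 < LANE_HALF_WIDTH_M]
    if not in_lane:
        return cruise_speed_mps
    return min(cruise_speed_mps, min(in_lane) / TIME_GAP_S)


def control(pose: Pose, speed_mps: float, target_speed_mps: float) -> Command:
    """Control: proportional steering toward the lane centre and speed tracking."""
    steering = -0.1 * pose.lane_offset_m - 0.5 * pose.heading_rad
    acceleration = 0.5 * (target_speed_mps - speed_mps)
    return Command(steering, acceleration)


def step(raw, gps_offset_m, landmark_offset_m, heading_rad,
         speed_mps, cruise_speed_mps=25.0) -> Command:
    """One cycle through recognition, location, motion and control."""
    objects = recognize(raw)
    pose = locate(gps_offset_m, landmark_offset_m, heading_rad)
    target_speed = plan(objects, cruise_speed_mps)
    return control(pose, speed_mps, target_speed)
```

Calling `step([("car", 20.0, 0.0, 1.8)], 0.5, 0.3, 0.0, 20.0)` in this sketch yields a braking command, because the detected car sits in the ego lane 20 m ahead; moving the same car 3.5 m to the side yields a mild acceleration toward the cruise speed. The point is the layering: each engine consumes only the output of the engines before it.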

The Role of Free Space Detection

Free space detection is a critical aspect of self-driving systems and is pivotal in determining how an autonomous vehicle is controlled. Researchers around the world are developing the layers and tools necessary to integrate free space detection into Level 4–5, fully automated autonomous systems. The quality and speed of free space detection depend heavily on the approach used to calculate it. Neural network-based free space detection can be integrated into the recognition layer alongside the general object detection and classification layers. Functions like pedestrian and vehicle detection utilize deep convolutional neural networks (CNNs), while reliable road surface segmentation can be achieved with only a couple of layers of a fully convolutional network, with alternating CONV–POOL layers. Because vehicle localization and motion planning also rely on this detection output, dedicating hardware to accelerate this functionality can improve the throughput and performance of free space detection.

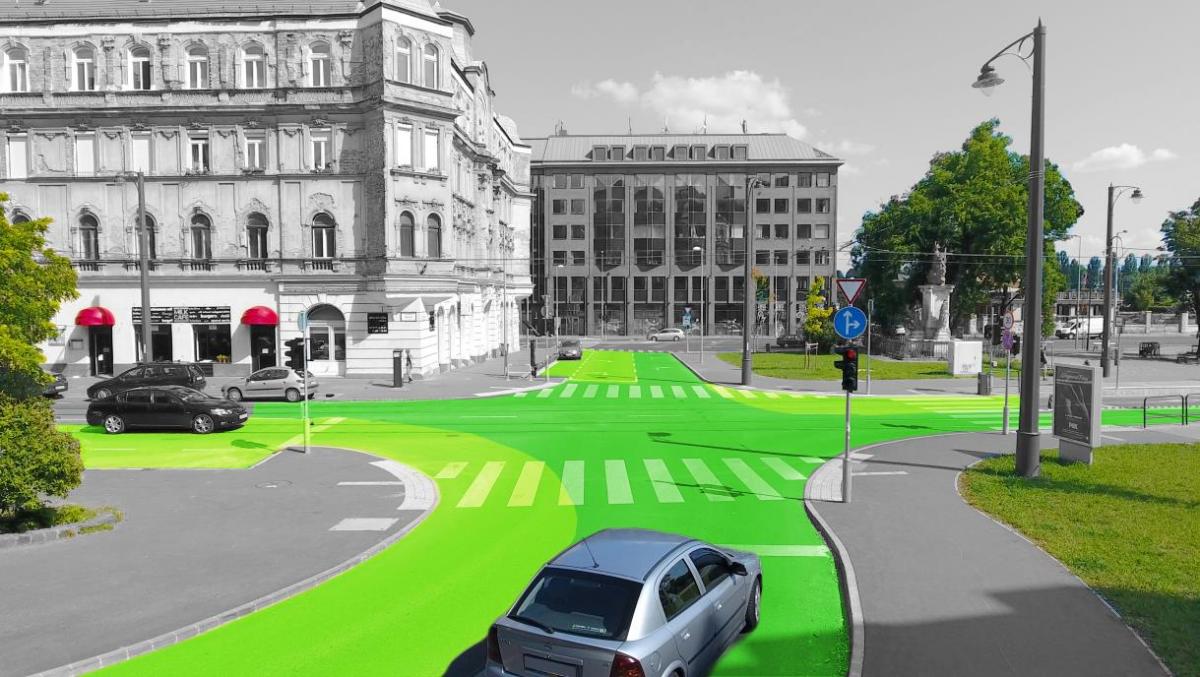

Figure 4: Free space, as human drivers see it
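
The alternating CONV–POOL structure mentioned above can be illustrated with a toy example in plain Python. This is only a sketch under strong assumptions: a single-channel image, hand-picked averaging kernels instead of learned weights, and a fixed threshold. The names `conv3x3_relu`, `max_pool2x2` and `free_space_mask` are hypothetical, and a production network would use learned multi-channel filters and typically restore the input resolution.

```python
from typing import List

Image = List[List[float]]


def conv3x3_relu(img: Image, kernel: Image, bias: float = 0.0) -> Image:
    """CONV layer: 3x3 cross-correlation with zero padding, followed by ReLU."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = bias
            for ky in range(3):
                for kx in range(3):
                    yy, xx = y + ky - 1, x + kx - 1
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += kernel[ky][kx] * img[yy][xx]
            out[y][x] = max(acc, 0.0)
    return out


def max_pool2x2(img: Image) -> Image:
    """POOL layer: 2x2 max pooling with stride 2 (halves each dimension)."""
    h, w = len(img) // 2, len(img[0]) // 2
    return [[max(img[2 * y][2 * x], img[2 * y][2 * x + 1],
                 img[2 * y + 1][2 * x], img[2 * y + 1][2 * x + 1])
             for x in range(w)] for y in range(h)]


def free_space_mask(img: Image, kernels: List[Image],
                    threshold: float = 0.5) -> List[List[bool]]:
    """Apply alternating CONV-POOL layers, then threshold into a coarse mask."""
    features = img
    for kernel in kernels:
        features = max_pool2x2(conv3x3_relu(features, kernel))
    return [[value > threshold for value in row] for row in features]


# Toy input: an 8x8 image whose lower half is "road" (1.0), upper half is not (0.0).
image = [[0.0] * 8 for _ in range(4)] + [[1.0] * 8 for _ in range(4)]

# Assumed, hand-picked averaging kernel; a trained network would learn its filters.
average = [[1.0 / 9.0] * 3 for _ in range(3)]

mask = free_space_mask(image, [average, average])
```

With two CONV–POOL stages the 8×8 input is reduced to a 2×2 mask, here `[[False, False], [True, True]]`: the lower cells are marked as free space and the upper ones are not. Each stage trades spatial resolution for a wider receptive field, which is one reason even a shallow stack can separate a large, uniform region like the road surface.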

When analyzing the components of autonomous driving in terms of free space detection, we look at the system holistically and strive to integrate tightly coupled algorithm modules with components that can be implemented efficiently. On the road to autonomous cars, there will be many challenges without a clear-cut answer, but as we travel further, artificial intelligence based systems will be a solid foundation for realizing full automation.

Learn More

CEVA and AImotive have collaborated on a joint implementation of a free space detection demo utilizing AImotive’s artificial intelligence based software and the CEVA-XM6 vision processor. To learn more about intelligent solutions for highly automated vehicles and autonomous driving, view the webinar: “Challenges of Vision Based Autonomous Driving & Facilitation of An Embedded Neural Network Platform”.

About the Authors

Árpád Takács is an AI researcher and outreach scientist at AImotive. He received his mechatronics and mechanical engineering modeling degree from the Budapest University of Technology and Economics. His fields of expertise are analytical mechanics, control engineering, surgical robotics and machine learning.

Jeff VanWashenova is the Director of Automotive Market Segment for CEVA. Jeff is utilizing his fifteen years of experience in the automotive industry to expand CEVA’s imaging & vision product line into a variety of automotive applications. He holds a BS in Electrical Engineering and an MBA from Michigan State.